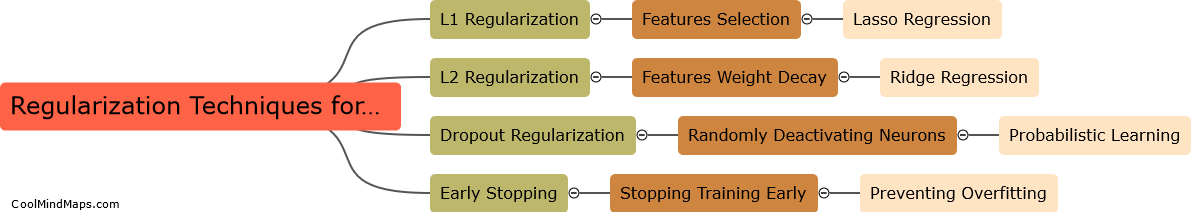

What is the concept of DropConnect?

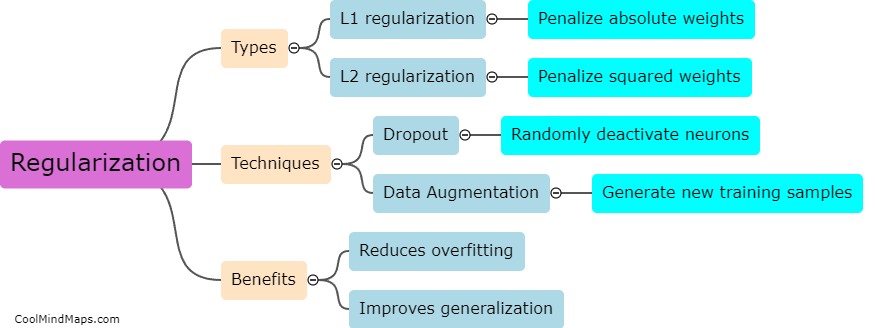

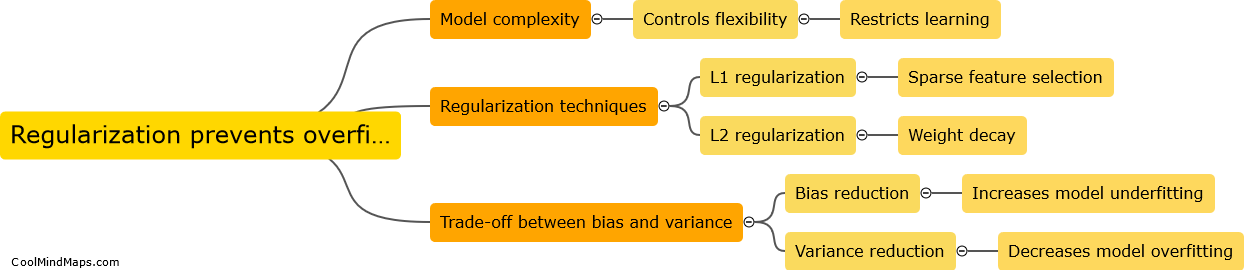

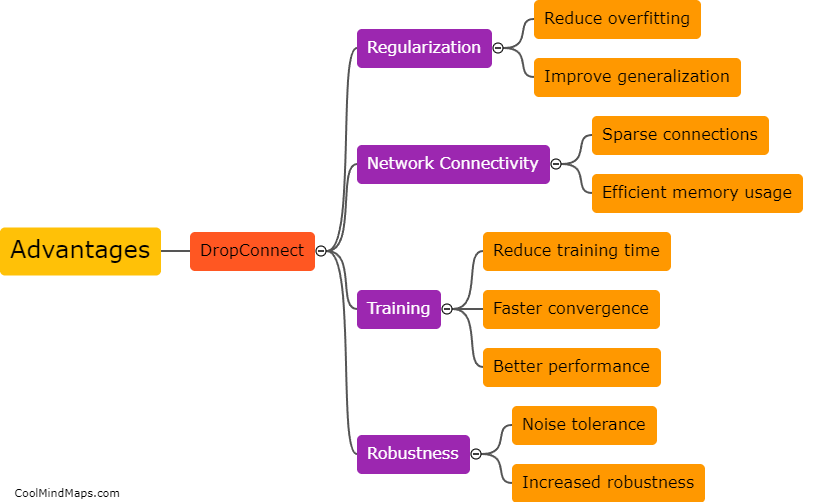

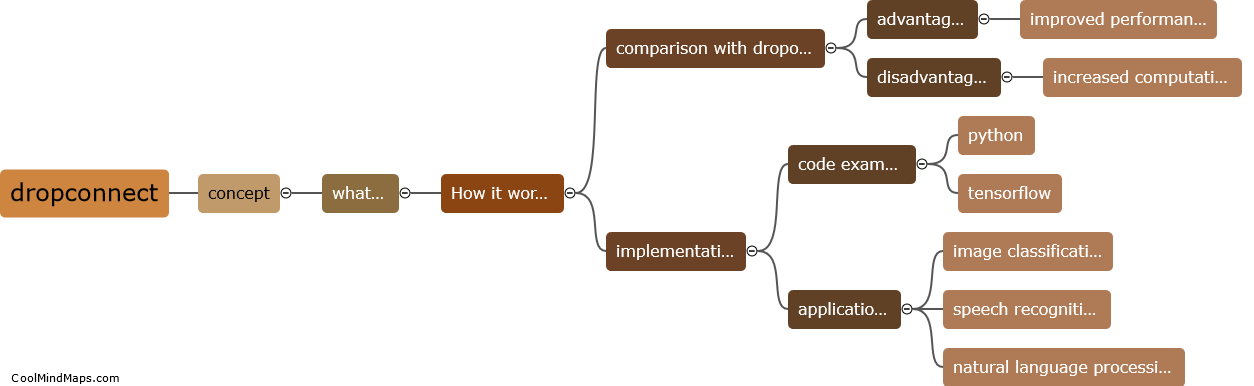

DropConnect is a regularization technique for neural networks that generalizes dropout, which is widely used in deep learning. During training it randomly sets a fraction of the connections (weights) between neurons to zero. Dropout, by contrast, zeroes the outputs of entire neurons; DropConnect masks individual weights, so a neuron can keep some of its incoming connections while losing others. Because the network cannot rely on any specific connection being present, it is discouraged from forming complex co-adaptations between neurons, which reduces overfitting and can improve performance on unseen data. It has been reported to be effective in applications such as image recognition and natural language processing.
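The difference between masking weights and masking neurons can be made concrete with a minimal NumPy sketch. The function names `dropconnect_linear` and `dropout_linear`, the `drop_prob` parameter, and the inverted-dropout-style rescaling by `1 / keep_prob` are assumptions made for illustration, not taken from any particular library. The sketch also shares one weight mask across the whole batch for simplicity.

```python
import numpy as np

def dropconnect_linear(x, W, b, drop_prob=0.5, training=True, rng=None):
    """Hypothetical helper: linear layer with DropConnect applied to W."""
    if not 0.0 <= drop_prob < 1.0:
        raise ValueError("drop_prob must be in [0, 1)")
    if not training or drop_prob == 0.0:
        return x @ W + b  # at inference, use the full weight matrix
    rng = np.random.default_rng() if rng is None else rng
    keep_prob = 1.0 - drop_prob
    # One Bernoulli draw per individual weight (connection).
    mask = rng.random(W.shape) < keep_prob
    # Assumption: rescale so the expected masked weight equals W.
    return x @ (W * mask / keep_prob) + b

def dropout_linear(x, W, b, drop_prob=0.5, training=True, rng=None):
    """Hypothetical helper: same layer with standard dropout on its outputs."""
    if not 0.0 <= drop_prob < 1.0:
        raise ValueError("drop_prob must be in [0, 1)")
    out = x @ W + b
    if not training or drop_prob == 0.0:
        return out
    rng = np.random.default_rng() if rng is None else rng
    keep_prob = 1.0 - drop_prob
    # One Bernoulli draw per output neuron (per example), not per weight.
    mask = rng.random(out.shape) < keep_prob
    return out * mask / keep_prob
```

In `dropconnect_linear` the mask has the shape of `W`, so each connection is dropped independently; in `dropout_linear` the mask has the shape of the layer output, so a dropped neuron loses all of its incoming connections at once.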

This mind map was published on 20 August 2023 and has been viewed 102 times.