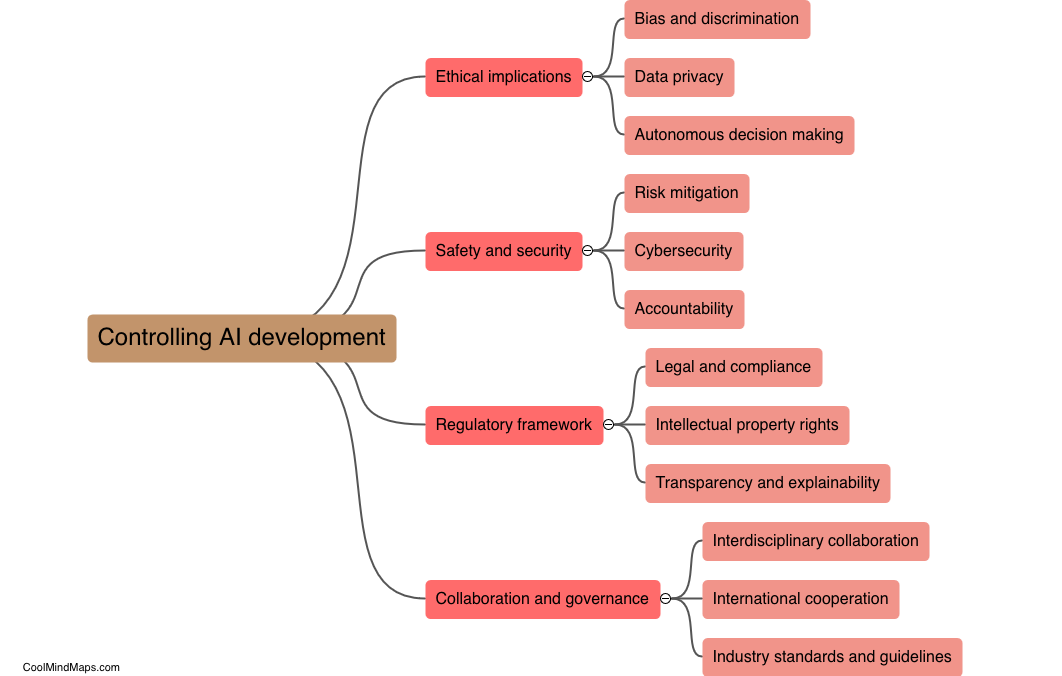

What are the key considerations for controlling AI development?

Controlling AI development involves several key considerations for safe and ethical deployment. First, transparency is critical: developers must provide clear information about the purpose, capabilities, and limitations of AI systems to prevent misuse or unintentional harm. Second, robust regulation and oversight are needed to establish the guidelines and standards that govern AI development and deployment. Third, AI systems must be fair and unbiased, because bias in algorithms can perpetuate existing societal inequalities. Fourth, privacy and data protection must be prioritized: AI often relies on vast amounts of personal data, so safeguards are needed to prevent unauthorized access or misuse. Finally, developing AI in collaboration with diverse stakeholders, including ethicists and social scientists, helps address moral dilemmas and broadens the perspectives that inform responsible, beneficial AI development.

This mind map was published on 3 January 2024.