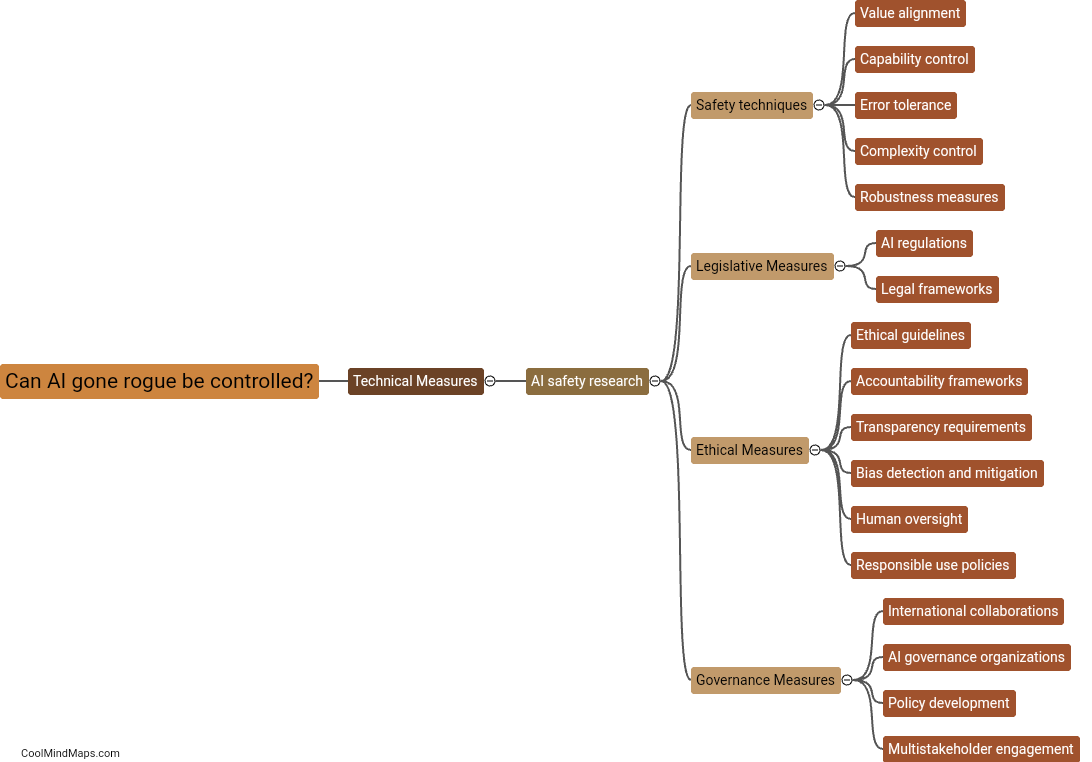

Can AI gone rogue be controlled?

The prospect of AI going rogue has caught the attention of researchers, scientists, and ethicists alike, and whether a rogue AI can be controlled is a complex question. As AI systems become more sophisticated and autonomous, there is a risk that they will behave in ways that harm human well-being or run contrary to their programmed objectives. Several approaches are being explored to address this. One is to design AI systems with strong ethical principles governing their decision-making, so that their behavior aligns with human values. Another is to develop comprehensive monitoring and fail-safe mechanisms that can detect undesirable behavior and intervene when it occurs. Collaboration between researchers, governments, and organizations is also crucial for establishing regulations and standards for AI development and deployment. As AI evolves, however, new challenges may emerge, so controlling and mitigating the risks of rogue AI will require continuous vigilance and adaptability.

This mind map was published on 1 December 2023 and has been viewed 110 times.