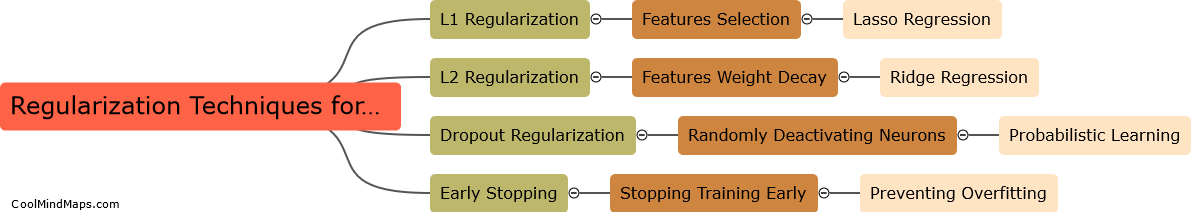

How can regularization be implemented in training a neural network?

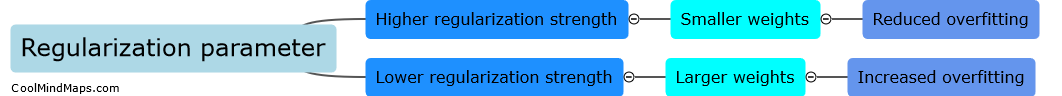

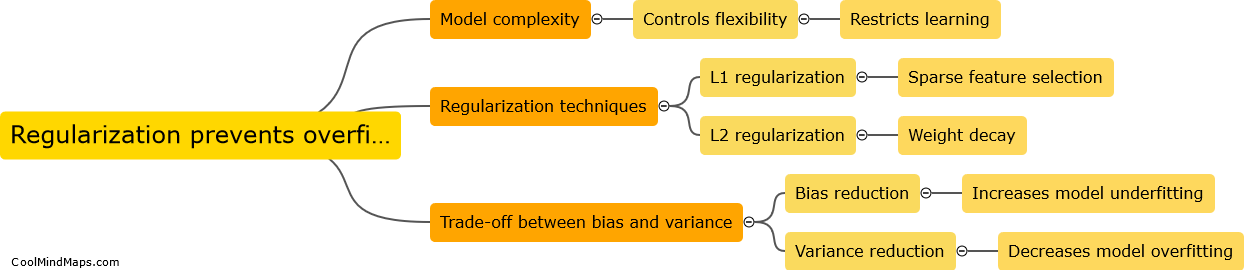

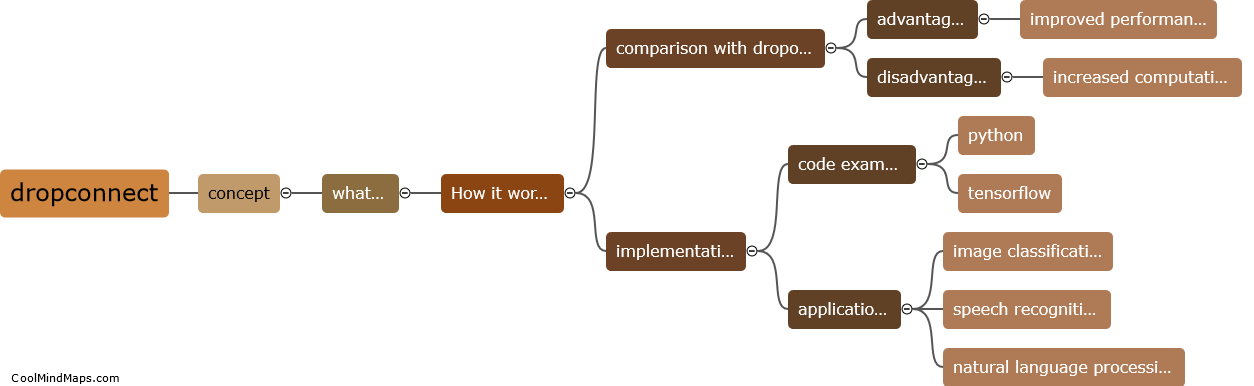

Regularization is a family of techniques used when training neural networks to reduce overfitting and improve generalization to unseen data. The most direct form adds a penalty term to the loss function during training. L2 regularization adds a penalty proportional to the sum of the squared weights; under plain stochastic gradient descent this is equivalent to weight decay, although the two differ for adaptive optimizers such as Adam. The penalty pushes the network toward smaller weights, which discourages it from relying too heavily on any single feature. L1 regularization instead penalizes the absolute values of the weights and tends to drive some of them to exactly zero. Other techniques act on the network rather than on the loss: dropout randomly zeroes a fraction of activations during training, and batch normalization, although designed mainly to stabilize training, often has a mild regularizing effect. In each case the regularization strength is a hyperparameter that balances model complexity against generalization accuracy.
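As a rough illustration of the ideas in the paragraph above, the following sketch shows an L2 penalty, an L1 penalty, a gradient step that includes the L2 term, and inverted dropout, using only the Python standard library. The function names (`l2_penalty`, `l1_penalty`, `sgd_step_l2`, `dropout`) and the parameters `lam`, `lr`, and `p_drop` are hypothetical and chosen for this example; real frameworks expose the same ideas through their own APIs.

```python
import random


def l2_penalty(weights, lam):
    """L2 term: lam times the sum of squared weights."""
    return lam * sum(w * w for w in weights)


def l1_penalty(weights, lam):
    """L1 term: lam times the sum of absolute weights."""
    return lam * sum(abs(w) for w in weights)


def sgd_step_l2(weights, grads, lr, lam):
    """One plain SGD step on data_loss + lam * sum(w^2).

    `grads` holds the gradient of the data loss only. The gradient of the
    penalty is 2 * lam * w, so every weight is also pulled toward zero.
    """
    return [w - lr * (g + 2.0 * lam * w) for w, g in zip(weights, grads)]


def dropout(activations, p_drop, rng, training=True):
    """Inverted dropout: zero each activation with probability p_drop and
    rescale the survivors by 1 / (1 - p_drop). At inference time the
    activations pass through unchanged. Assumes 0 <= p_drop < 1."""
    if not training or p_drop == 0.0:
        return list(activations)
    keep = 1.0 - p_drop
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


if __name__ == "__main__":
    weights = [1.0, -2.0]
    data_loss = 0.25  # assumed value standing in for the unregularized loss
    lam = 0.1

    total_loss = data_loss + l2_penalty(weights, lam)
    print("regularized loss:", total_loss)

    # With a zero data gradient, the step only shrinks the weights.
    print("after one step:", sgd_step_l2(weights, [0.0, 0.0], lr=0.1, lam=lam))

    rng = random.Random(0)  # seeded so repeated runs match
    print("dropout (train):", dropout([1.0, 1.0, 1.0, 1.0], 0.5, rng))
    print("dropout (eval): ", dropout([1.0, 1.0, 1.0, 1.0], 0.5, rng, training=False))
```

With a zero data gradient, `sgd_step_l2` only shrinks each weight by a factor of `1 - 2 * lr * lam`, which is why L2 regularization and weight decay coincide for plain SGD. The dropout sketch rescales the surviving activations so that their expected value is the same during training and inference.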

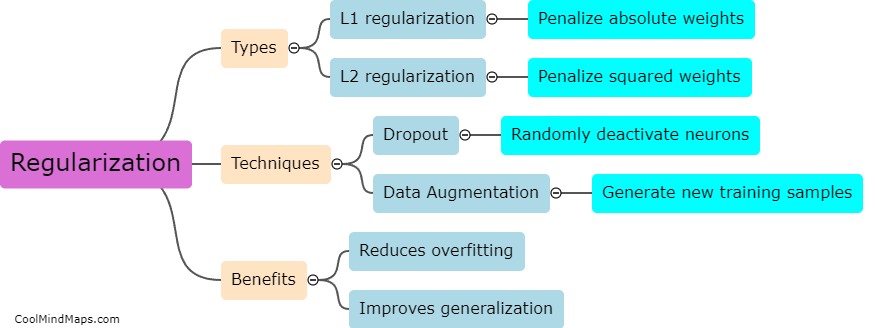

This mind map was published on 4 September 2023 and has been viewed 95 times.