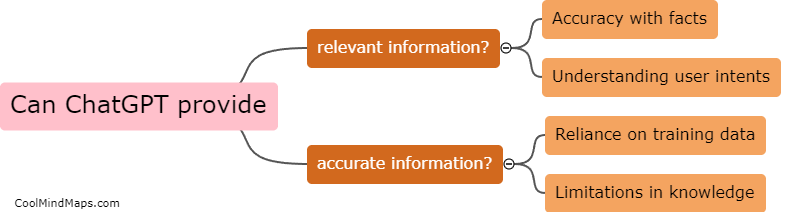

What measures are taken to ensure the safety and ethical use of ChatGPT?

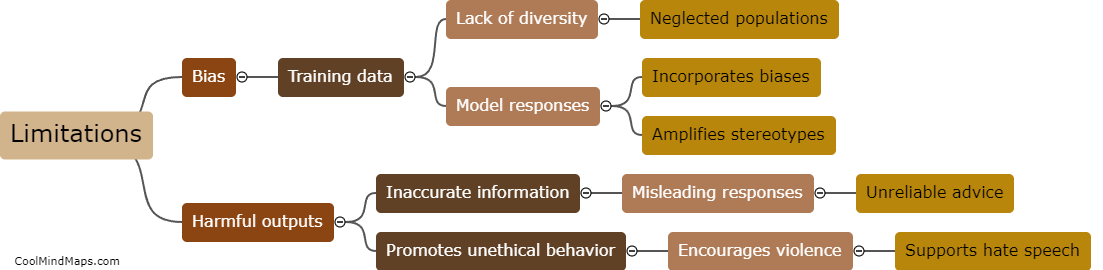

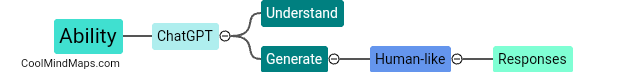

OpenAI takes several measures to ensure the safe and ethical use of ChatGPT. Training follows a two-stage approach. In the first stage, "pre-training," the model learns from a large corpus of text, much of it publicly available on the internet. This data is filtered to reduce explicit and harmful content, although filtering alone cannot remove every bias the data contains. In the second stage, "fine-tuning," the model is trained on a narrower dataset produced with the help of human reviewers, who write example responses and rank model outputs (the basis of reinforcement learning from human feedback, or RLHF).

OpenAI maintains a close feedback loop with these reviewers: it gives them written guidelines, answers their questions, and refines the guidelines over time. This iterative process helps reduce biases and improve the model's behavior with each round.

OpenAI also invests in ongoing research to improve the system's default behavior. It aims to let users customize the system to match their preferences, within bounds designed to prevent malicious use. Finally, OpenAI seeks external input, soliciting public feedback on topics such as system behavior, deployment policies, and disclosure mechanisms, with the stated goal of including as many perspectives as possible in its decisions.

Overall, OpenAI combines data filtering, human feedback, usage bounds, and public consultation to address safety and ethical concerns while still delivering the benefits of ChatGPT.

This mind map was published on 1 December 2023.