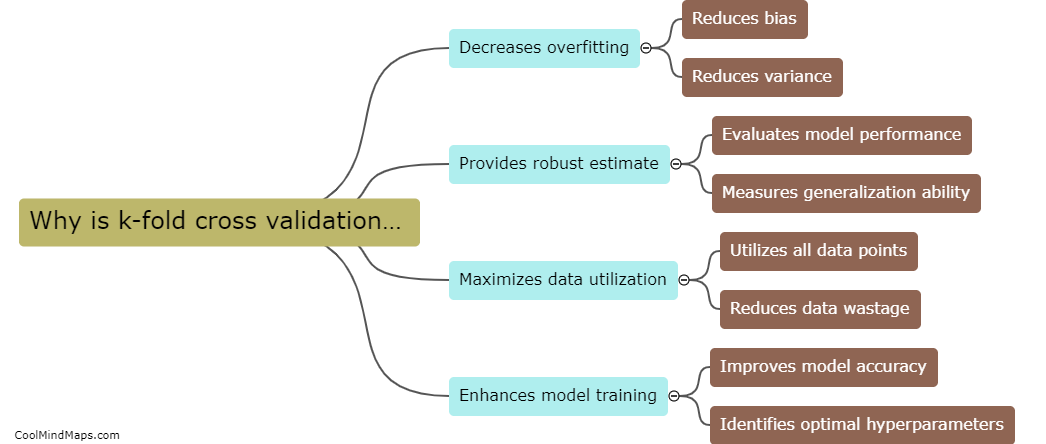

Why is k-fold cross validation used in machine learning?

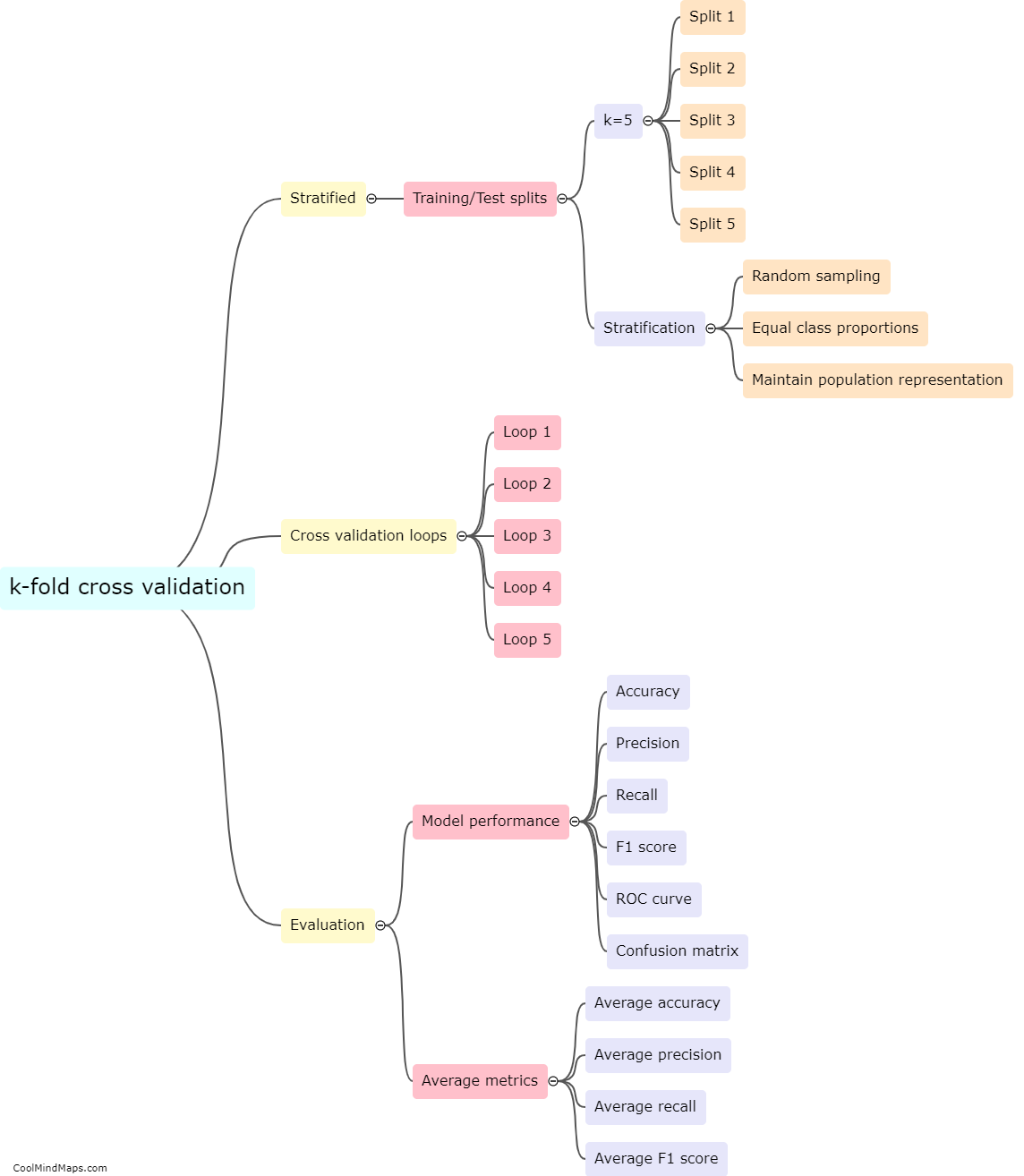

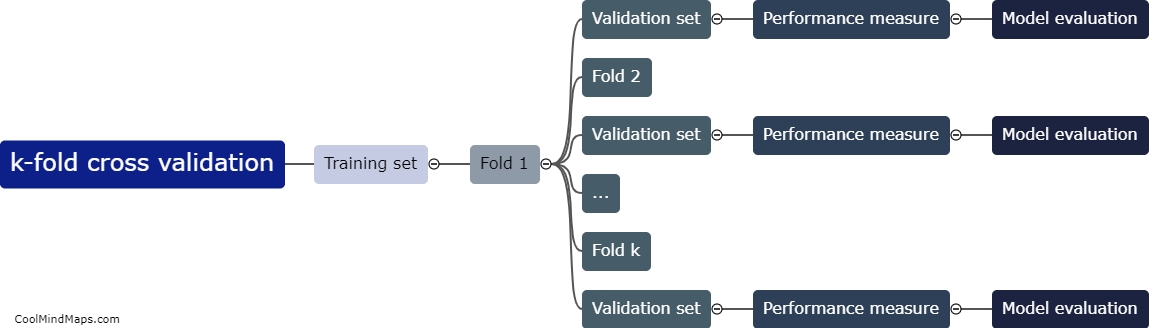

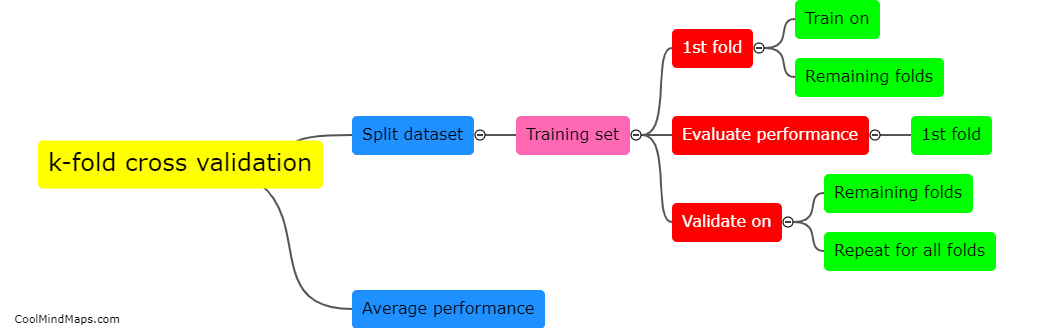

K-fold cross validation is a widely used technique in machine learning for evaluating and tuning models. The dataset is split into k subsets, or folds; the model is trained k times, each time holding out a different fold as the test set and training on the remaining k-1 folds, so every sample is used for testing exactly once. Averaging the k scores gives a more robust estimate of model performance than a single train-test split, because the result depends less on which samples happen to land in the test set. The spread of scores across folds also shows how sensitive the model is to its training data, which helps in detecting overfitting or underfitting and in choosing model parameters more reliably. Consequently, k-fold cross validation gives a more trustworthy estimate of how well the model will generalize before it is deployed in real-world scenarios.
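The procedure can be sketched in a few lines of plain Python. This is a minimal illustration rather than a reference implementation: the function names (`k_fold_indices`, `cross_validate`, `fit_line`, `predict_line`), the toy dataset, and the choice of a least-squares line with mean squared error as the score are all assumptions made for the example.

```python
import random
from statistics import mean


def k_fold_indices(n_samples, k, seed=0):
    """Shuffle the indices 0..n_samples-1 and split them into k nearly equal folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # The first (n_samples % k) folds receive one extra sample.
    base, extra = divmod(n_samples, k)
    folds, start = [], 0
    for i in range(k):
        size = base + (1 if i < extra else 0)
        folds.append(indices[start:start + size])
        start += size
    return folds


def cross_validate(xs, ys, k, fit, predict, seed=0):
    """Return one mean squared error per fold, each fold held out exactly once."""
    folds = k_fold_indices(len(xs), k, seed)
    scores = []
    for i, test_idx in enumerate(folds):
        # Train on every fold except the i-th one.
        train_idx = [j for f, fold in enumerate(folds) if f != i for j in fold]
        model = fit([xs[j] for j in train_idx], [ys[j] for j in train_idx])
        errors = [(predict(model, xs[j]) - ys[j]) ** 2 for j in test_idx]
        scores.append(mean(errors))
    return scores


def fit_line(xs, ys):
    """Toy model (an assumption for this sketch): least-squares straight line."""
    mx, my = mean(xs), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx


def predict_line(model, x):
    slope, intercept = model
    return slope * x + intercept


# Made-up data lying on the line y = 2x + 1.
xs = list(range(10))
ys = [2 * x + 1 for x in xs]

scores = cross_validate(xs, ys, k=5, fit=fit_line, predict=predict_line)
print("per-fold MSE:", scores)
print("mean MSE:", mean(scores))
```

In practice the per-fold scores are usually reported as a mean together with their spread, and the same loop can be repeated for each candidate parameter setting to compare them on equal footing.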

This mind map was published on 23 January 2024 and has been viewed 90 times.