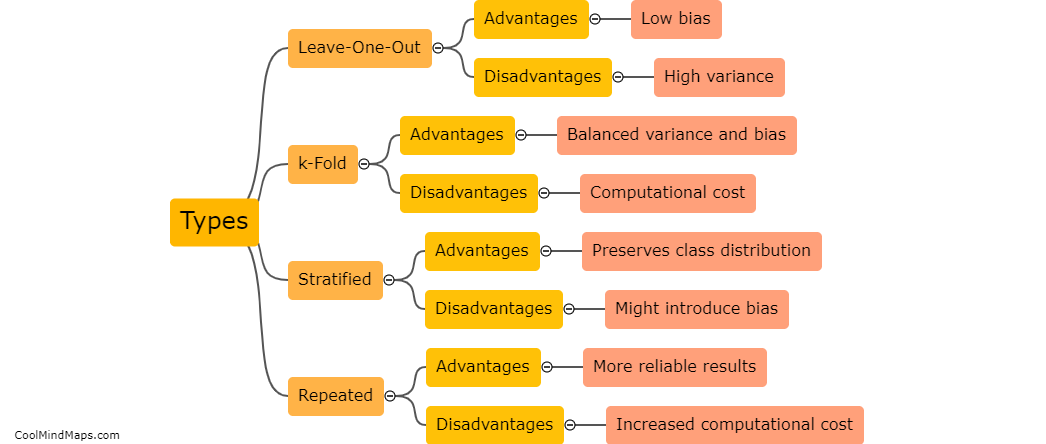

What are the different types of cross-validation?

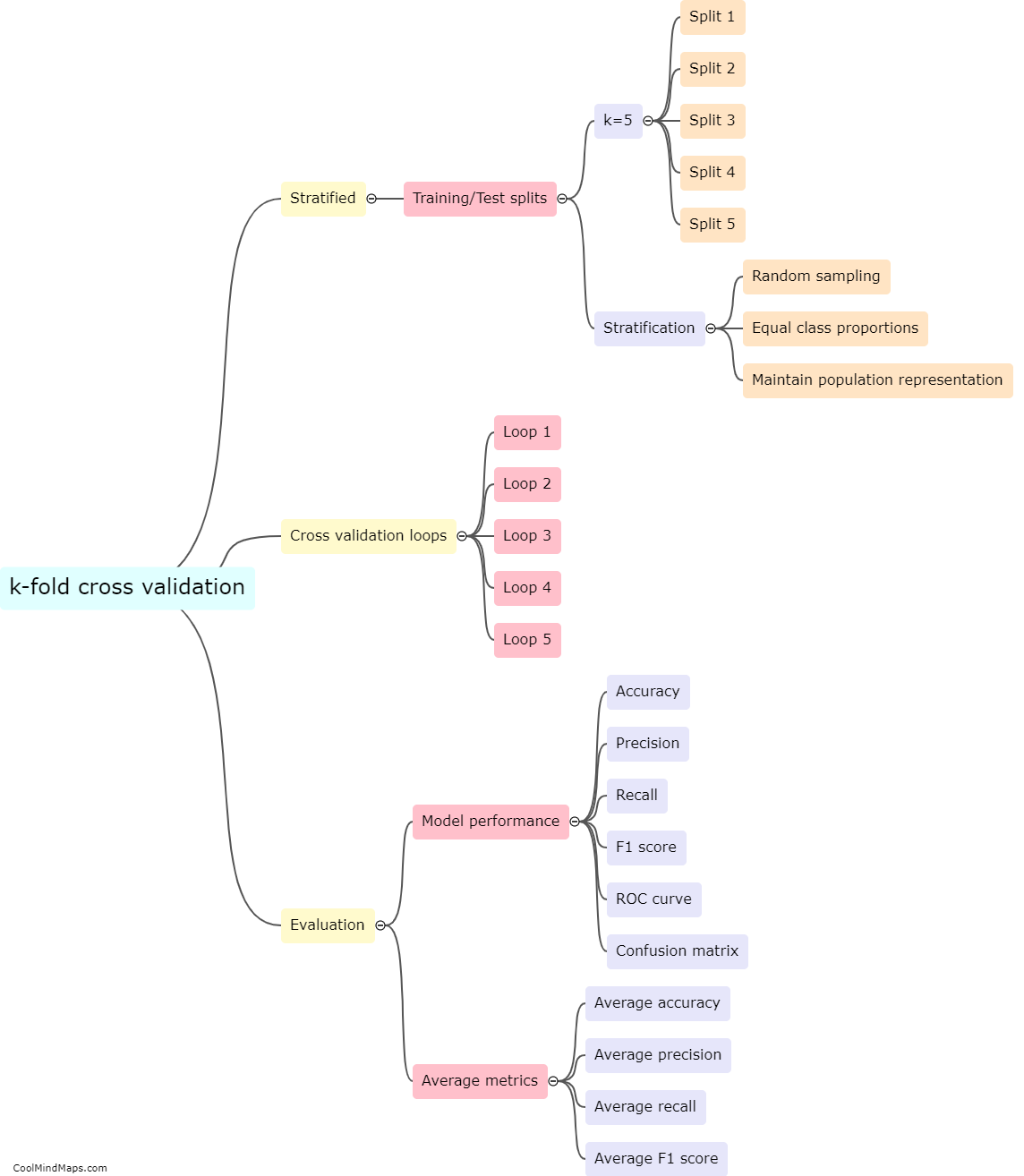

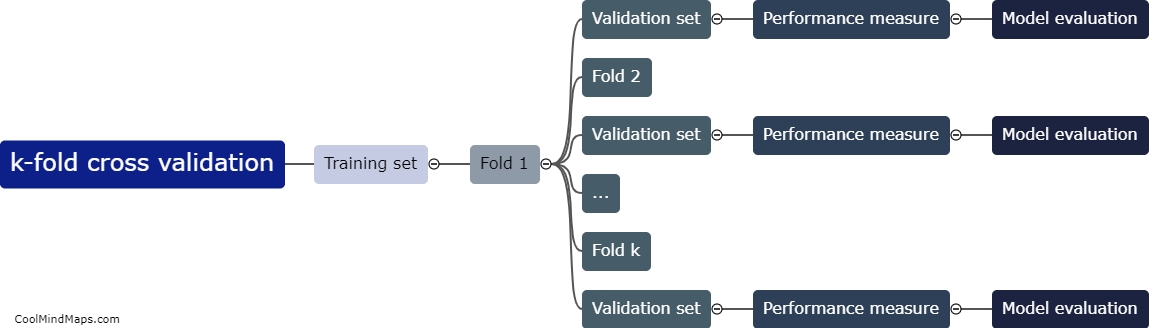

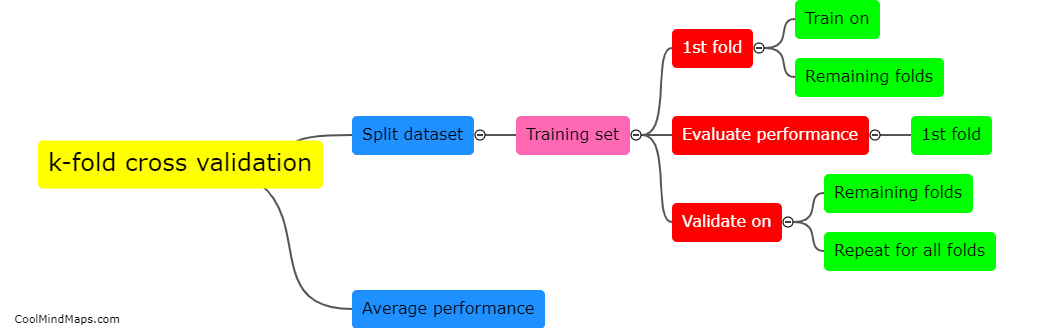

Cross-validation is a technique used in machine learning to estimate how well a model will perform on unseen data. Several methods exist, and the right one depends on the dataset. The most common is k-fold cross-validation: the dataset is split into k folds of roughly equal size, and the model is trained and evaluated k times, each time holding out a different fold as the validation set and training on the remaining k - 1 folds. The k scores are then usually averaged. Stratified k-fold cross-validation additionally ensures that each fold has approximately the same distribution of target classes as the overall dataset, which matters when classes are imbalanced. Leave-one-out cross-validation is the special case of k-fold where k equals the number of samples: each sample serves once as the validation set, which makes it costly on large datasets. Time series cross-validation is suited to sequential data: the data is split into consecutive blocks and the model is always trained on earlier observations and validated on later ones, so the temporal order is preserved. The choice of method depends on factors such as dataset size, class balance, and whether the data has temporal dependence.
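To make the four splitting schemes concrete, here is a minimal pure-Python sketch that only generates train and validation indices. The function names (`k_fold_indices`, `leave_one_out_indices`, `stratified_k_fold_indices`, `time_series_indices`) are hypothetical, chosen for illustration. The details are also assumptions rather than part of the description above: no shuffling, round-robin stratification, and an expanding training window for the time series case.

```python
from collections import defaultdict


def k_fold_indices(n_samples, k):
    """Yield (train, val) index lists for plain k-fold, without shuffling."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    # Folds are "roughly equal": the first n_samples % k folds get one extra sample.
    base, extra = divmod(n_samples, k)
    start = 0
    for fold in range(k):
        stop = start + base + (1 if fold < extra else 0)
        yield indices[:start] + indices[stop:], indices[start:stop]
        start = stop


def leave_one_out_indices(n_samples):
    """Leave-one-out is k-fold with k equal to the number of samples."""
    return k_fold_indices(n_samples, n_samples)


def stratified_k_fold_indices(labels, k):
    """Yield (train, val) splits that keep class proportions roughly equal."""
    by_class = defaultdict(list)
    for i, label in enumerate(labels):
        by_class[label].append(i)
    folds = [[] for _ in range(k)]
    # Assumption: deal samples round-robin, class by class, so each class
    # is spread as evenly as possible over the folds.
    counter = 0
    for members in by_class.values():
        for i in members:
            folds[counter % k].append(i)
            counter += 1
    for fold in range(k):
        val = sorted(folds[fold])
        train = sorted(i for j, f in enumerate(folds) if j != fold for i in f)
        yield train, val


def time_series_indices(n_samples, n_splits):
    """Yield expanding-window splits: train on the past, validate on the next block."""
    block = n_samples // (n_splits + 1)
    if block < 1:
        raise ValueError("not enough samples for the requested number of splits")
    for split in range(1, n_splits + 1):
        train_end = split * block
        # The last validation block absorbs any leftover samples.
        val_end = train_end + block if split < n_splits else n_samples
        yield list(range(train_end)), list(range(train_end, val_end))


if __name__ == "__main__":
    for train, val in time_series_indices(10, 3):
        print("train:", train, "validate:", val)
```

In practice a library implementation is usually preferable; for example, scikit-learn provides `KFold`, `StratifiedKFold`, `LeaveOneOut`, and `TimeSeriesSplit` in `sklearn.model_selection`, though their exact fold boundaries may differ from this sketch.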

This mind map was published on 23 January 2024 and has been viewed 79 times.