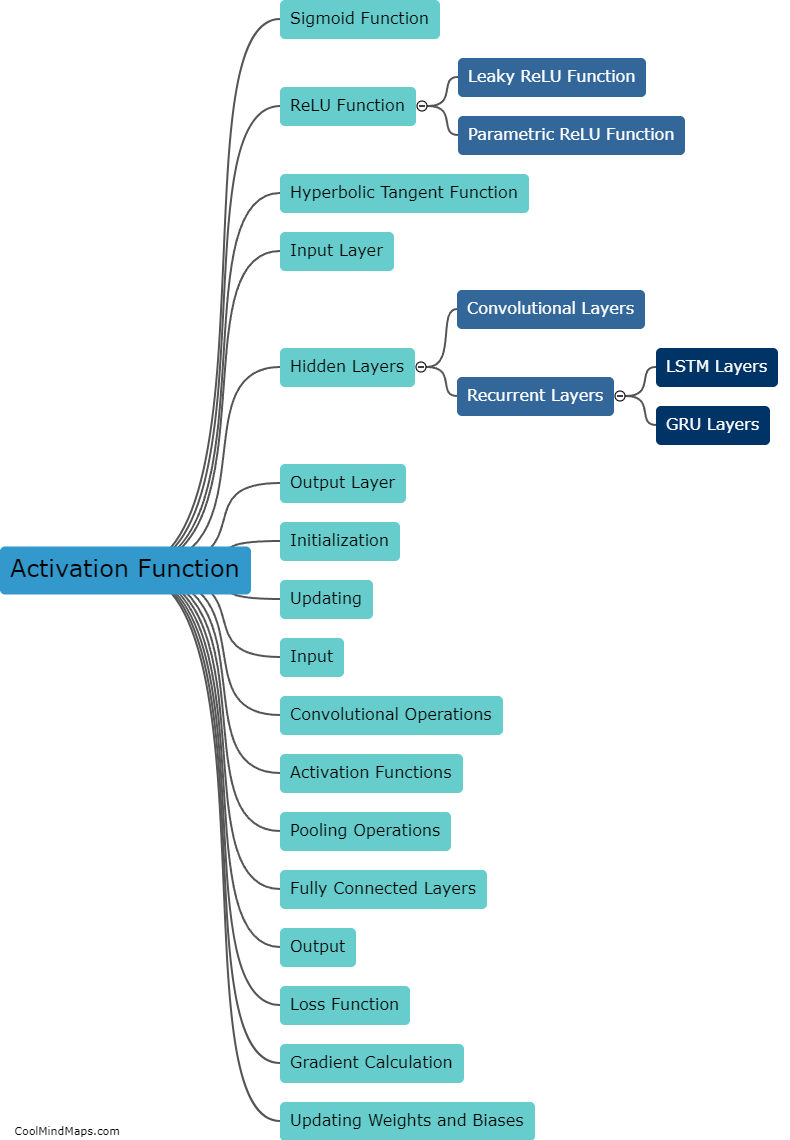

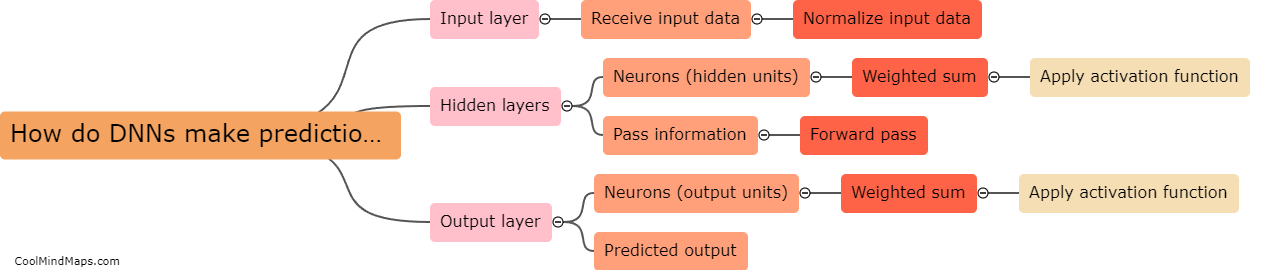

What is the architecture of a DNN?

The architecture of a deep neural network (DNN) is the structure and arrangement of its layers. A DNN consists of an input layer, a stack of hidden layers (a network is usually called "deep" when it has more than one), and an output layer. Each layer is made up of artificial neurons, or nodes; a node typically computes a weighted sum of its inputs, adds a bias, and passes the result through a nonlinear activation function. The input layer receives the raw data. The hidden layers carry out the intermediate computations, with successive layers extracting progressively more abstract features from the input. The output layer turns the learned representation into the final prediction. The connections between nodes in different layers carry weights, and training adjusts these weights so that the network's outputs better match the desired ones. Choices such as the number of layers, the number of nodes per layer, and how the layers are connected determine the network's capacity to learn complex patterns and how well it suits a given task.
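The layered structure described above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions, not a reference implementation: the layer sizes (4 inputs, two hidden layers of 8 nodes, 3 outputs), the ReLU and softmax activations, the random weight range, and every function name are choices made for this example. The weights are untrained, so the output is a valid probability distribution but not a meaningful prediction.

```python
import math
import random


def relu(values):
    """Element-wise ReLU activation, used here for the hidden layers."""
    return [max(0.0, v) for v in values]


def softmax(values):
    """Turn raw output scores into probabilities that sum to 1."""
    shift = max(values)  # subtract the max for numerical stability
    exps = [math.exp(v - shift) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def init_layer(n_in, n_out, rng):
    """One fully connected layer: one weight per connection, one bias per node."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


def dense(inputs, weights, biases):
    """Each node computes a weighted sum of its inputs plus its bias."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


def build_network(layer_sizes, seed=0):
    """Create one (weights, biases) pair per pair of consecutive layers."""
    rng = random.Random(seed)
    return [
        init_layer(n_in, n_out, rng)
        for n_in, n_out in zip(layer_sizes, layer_sizes[1:])
    ]


def forward(network, inputs):
    """Pass the input through every layer in order and return the output."""
    activations = inputs
    for index, (weights, biases) in enumerate(network):
        z = dense(activations, weights, biases)
        is_output_layer = index == len(network) - 1
        activations = softmax(z) if is_output_layer else relu(z)
    return activations


# Assumed architecture: input layer (4), two hidden layers (8, 8), output layer (3).
LAYER_SIZES = [4, 8, 8, 3]
network = build_network(LAYER_SIZES)
prediction = forward(network, [0.2, -1.0, 0.5, 0.7])
print(prediction)  # three probabilities, one per output node
```

Changing `LAYER_SIZES` changes the architecture: adding entries makes the network deeper, and larger entries make individual layers wider. Training, which would adjust the weights and biases, is deliberately left out of this sketch.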

This mind map was published on 10 November 2023.