What are the advantages of using DropConnect in deep learning?

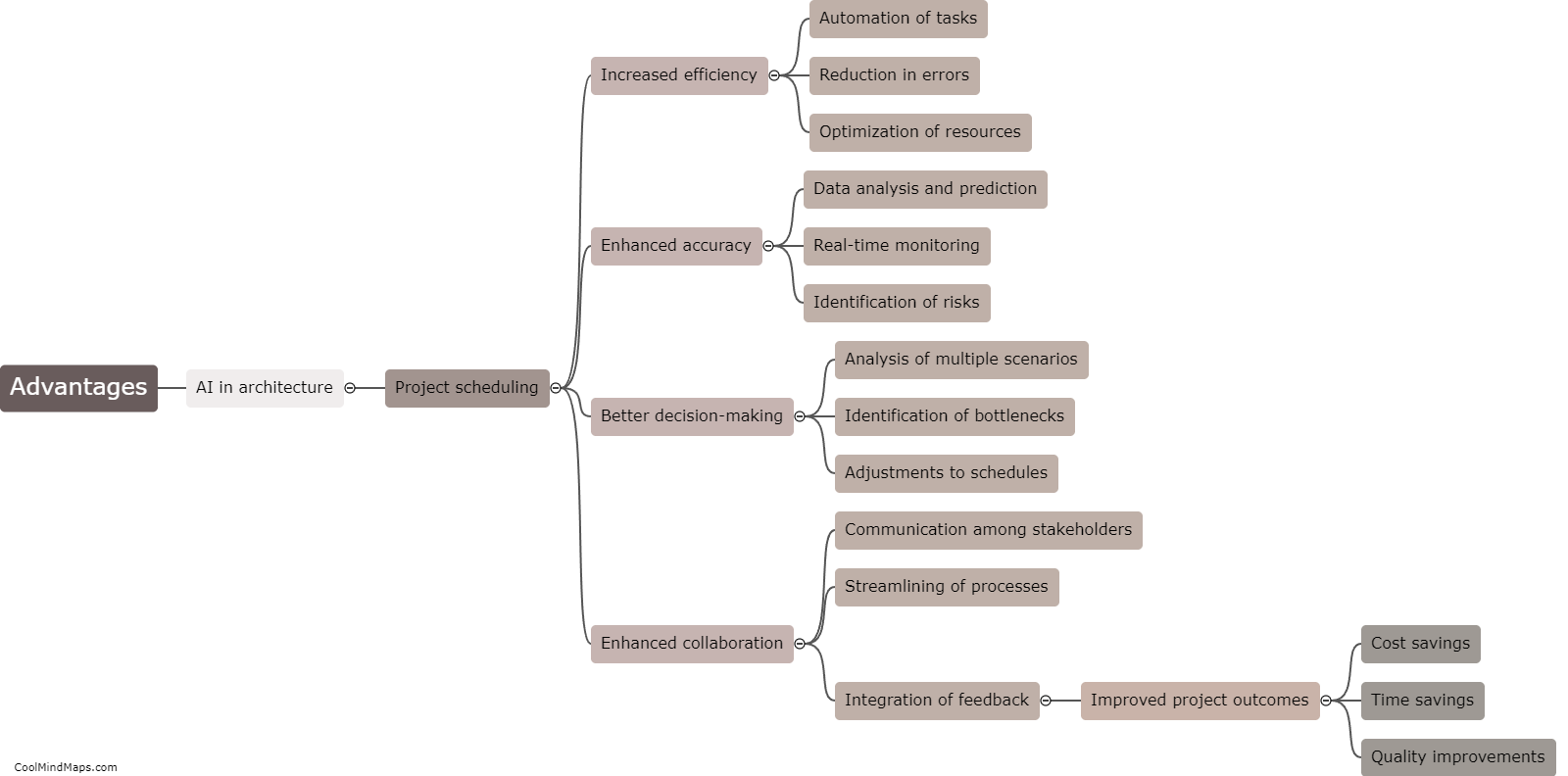

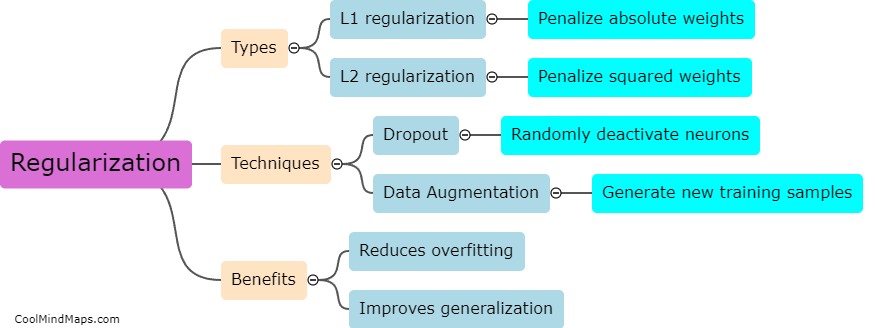

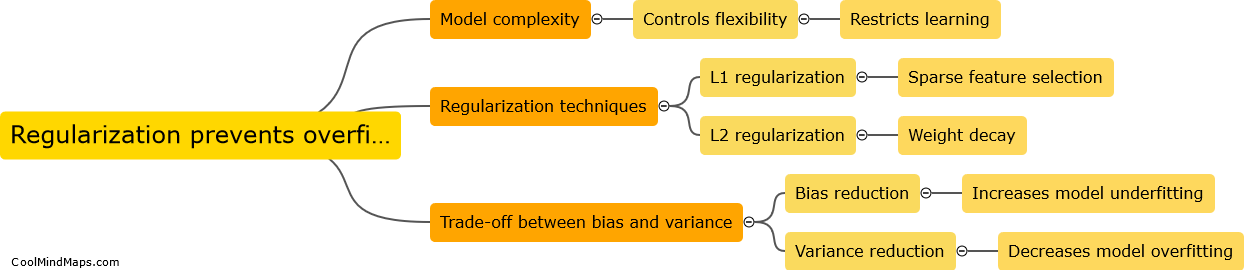

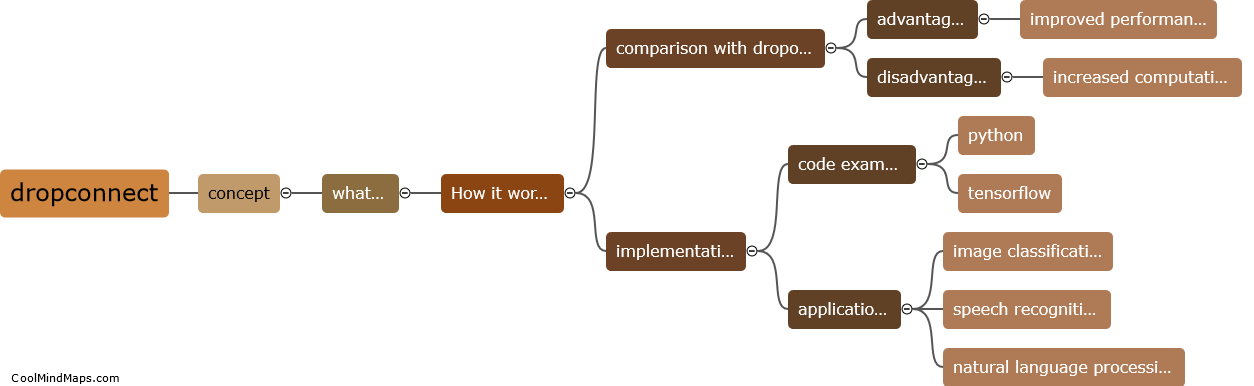

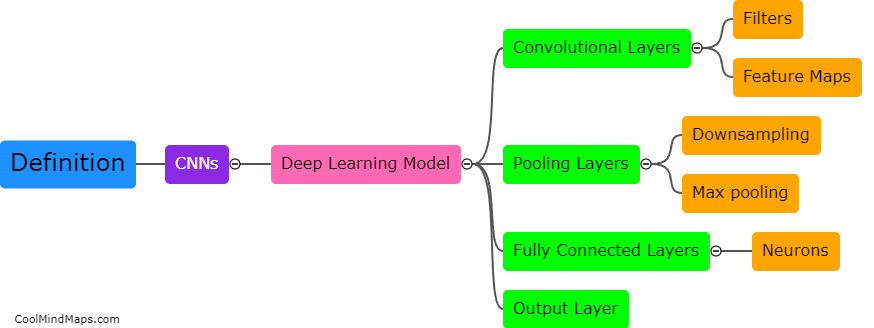

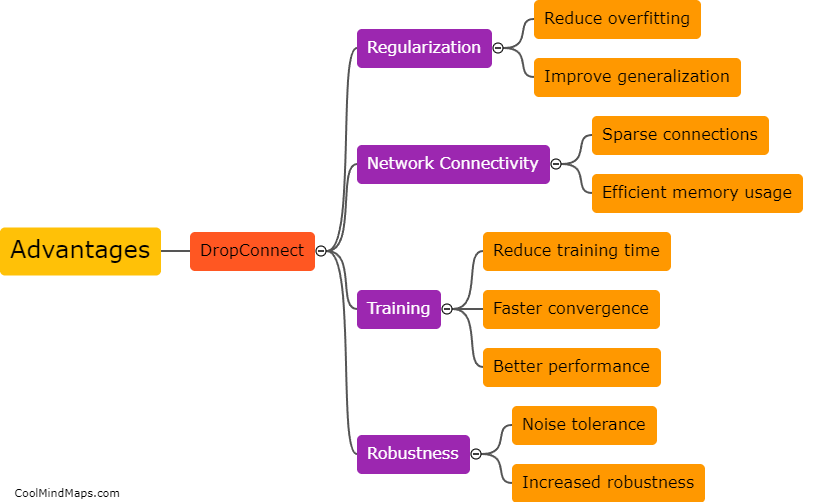

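DropConnect is a regularization technique for deep learning models that aims to improve how well they generalize to unseen data. It works similarly to dropout, but instead of randomly zeroing the activations of neurons, it randomly sets individual weights (the connections between neurons) to zero during training, typically drawing a fresh random mask for each training example or mini-batch. This randomness helps prevent overfitting by forcing the network to learn redundant representations that do not rely on any single connection. DropConnect can also be viewed as implicitly training an ensemble: each mask defines a different sparse sub-network, there are exponentially many possible masks, and all of them share one set of weights, so a single trained model approximates an average over them. Because masking happens per weight rather than per unit, DropConnect generalizes dropout, which corresponds to masking all of a unit's outgoing weights together. The masks are applied only during training: at inference the full dense weight matrix is used, so DropConnect does not reduce the parameter count or the inference cost, and it does not increase model capacity. Overall, the advantages of DropConnect are stronger regularization, improved generalization, and an ensemble-like averaging effect obtained while training a single model.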
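To make the weight-masking idea concrete, here is a minimal sketch of a single fully connected layer with DropConnect, written in plain Python. The function name `dropconnect_forward` and its parameters are hypothetical, chosen for illustration only. The sketch also assumes "inverted" scaling (kept weights are divided by the keep probability during training) so that plain dense weights can be used at inference; that is a common simplification, not necessarily the inference scheme of any particular paper or library.

```python
import random


def dropconnect_forward(x, weights, bias, drop_prob=0.5, training=True, rng=None):
    """Forward pass of one dense layer with DropConnect (illustrative sketch).

    x:       list of n_in input values
    weights: list of n_out rows, each a list of n_in weights
    bias:    list of n_out bias values
    """
    if not 0.0 <= drop_prob < 1.0:
        raise ValueError("drop_prob must be in [0, 1)")
    rng = rng or random.Random()
    keep_prob = 1.0 - drop_prob

    out = []
    for row, b in zip(weights, bias):
        total = b
        for w, xi in zip(row, x):
            if training:
                # Draw an independent Bernoulli mask for every single weight.
                if rng.random() < keep_prob:
                    # Assumption: rescale kept weights so the expected
                    # pre-activation matches the unmasked layer.
                    total += (w / keep_prob) * xi
            else:
                # Inference: no masking, the full dense weights are used.
                total += w * xi
        out.append(total)
    return out


x = [1.0, 2.0, 3.0]
W = [[0.1, 0.2, 0.3], [0.4, 0.5, 0.6]]
b = [0.0, 0.0]

eval_out = dropconnect_forward(x, W, b, training=False)
train_out = dropconnect_forward(x, W, b, drop_prob=0.5, rng=random.Random(0))
```

Each training call samples a different mask, so `train_out` changes from call to call, while `eval_out` is deterministic. Note that the inference path does exactly the same amount of work as an ordinary dense layer, which is why DropConnect is a regularizer rather than a compression technique.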

This mind map was published on 4 September 2023 and has been viewed 94 times.