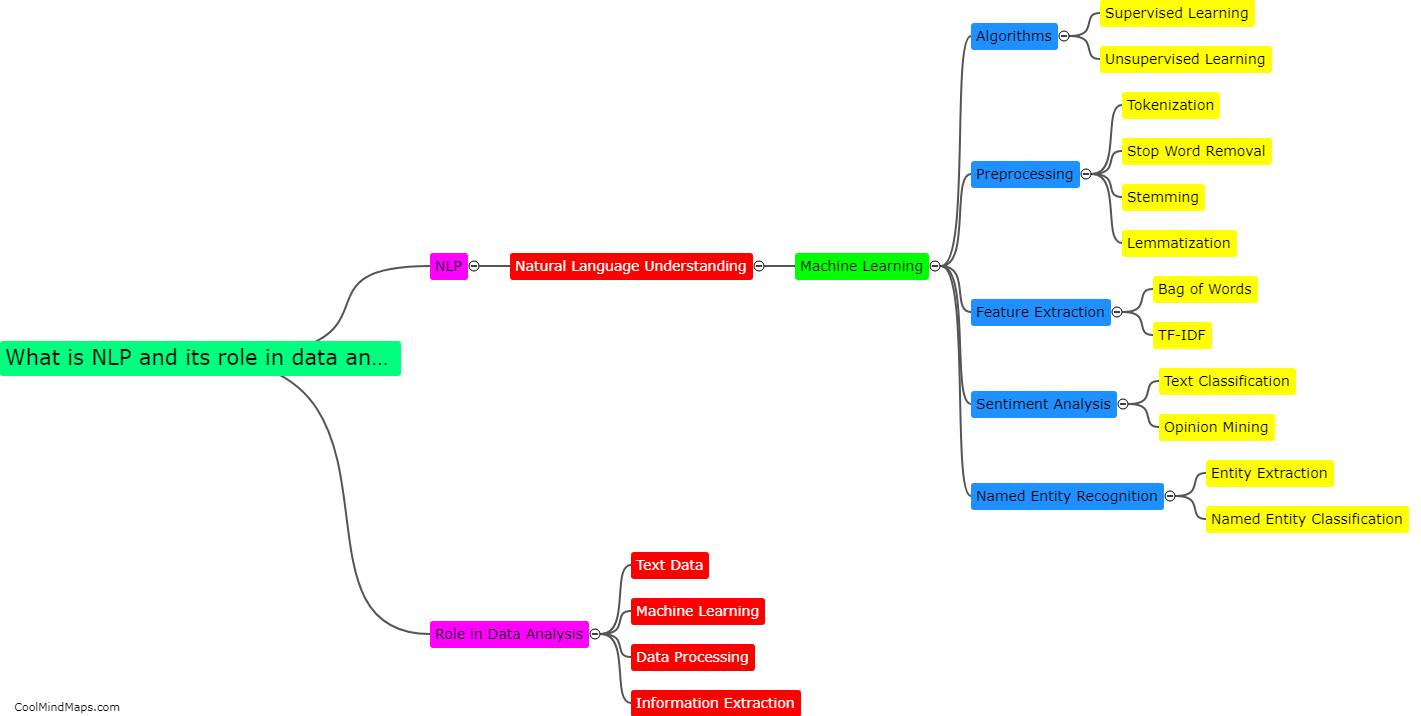

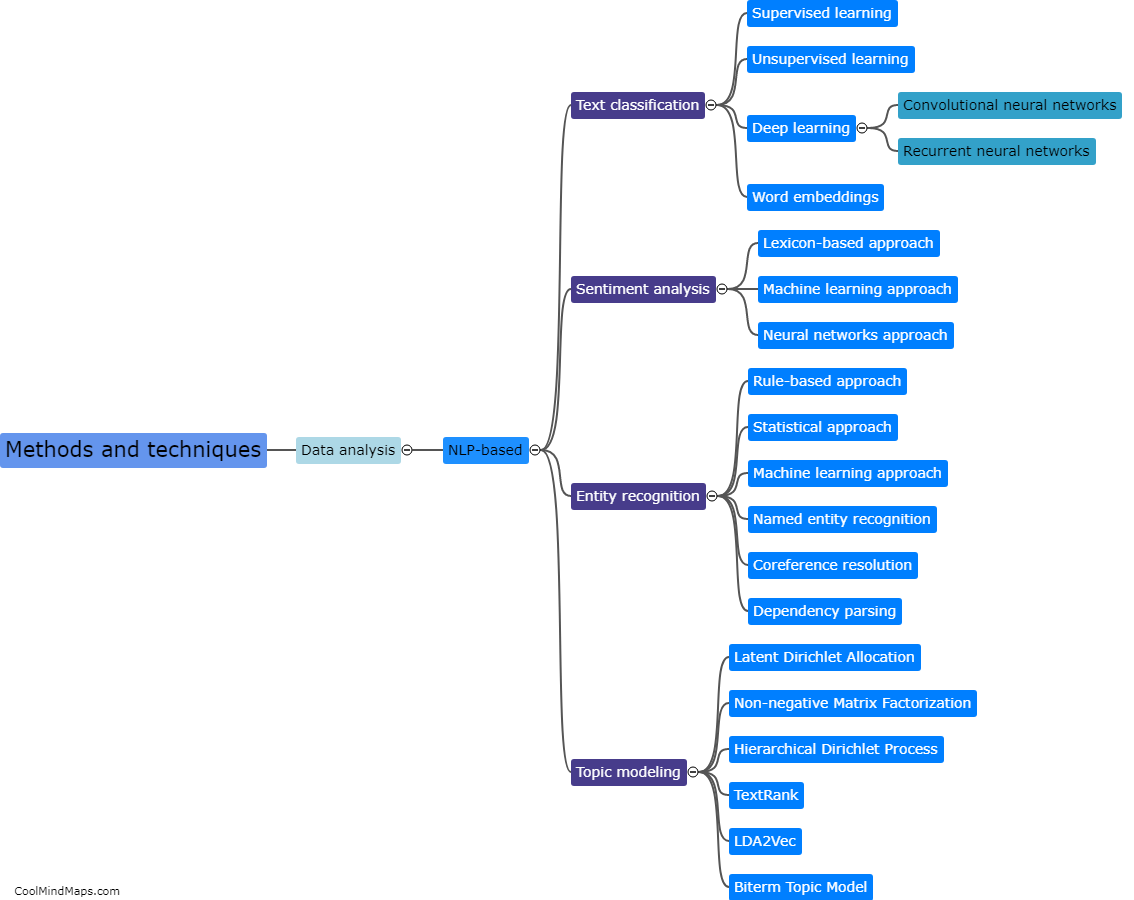

What are the methods and techniques used in NLP-based data analysis?

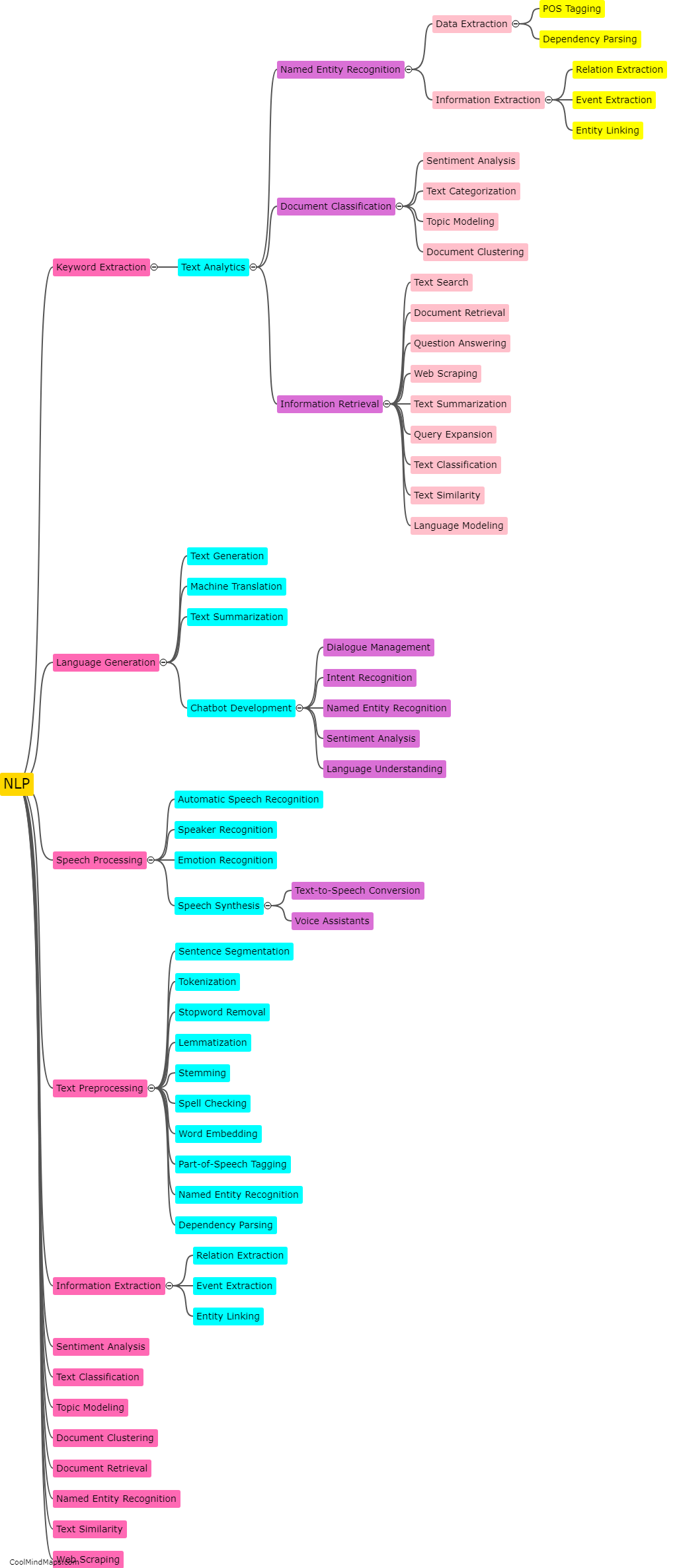

Natural Language Processing (NLP)-based data analysis uses a range of methods to extract insights from textual data. Preprocessing steps such as tokenization, stopword removal, stemming, and lemmatization convert raw sentences into a more structured format. Feature engineering techniques such as bag-of-words, n-grams, and word embeddings (for example, word2vec) represent text numerically. Machine learning algorithms like Naive Bayes, support vector machines, and neural networks are applied to tasks such as text classification, sentiment analysis, and named entity recognition. Additionally, topic modeling techniques like Latent Dirichlet Allocation (LDA) identify latent topics in a document collection, while embedding-based representations such as word2vec vectors are commonly used to estimate document similarity. Together, these methods form a pipeline from raw text to features to models that extracts insights and knowledge from textual data.
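As a rough illustration of how some of these pieces fit together, the sketch below chains tokenization, stopword removal, n-gram extraction, a bag-of-words representation, and a small multinomial Naive Bayes classifier for sentiment. It uses only the Python standard library. The stopword list, the training sentences, and all function and class names are assumptions made for this example, not part of the mind map, and a real project would more likely rely on an established NLP library.

```python
import math
import re
from collections import Counter

# Tiny illustrative stopword list (an assumption; real lists are much longer).
STOPWORDS = {"a", "an", "the", "is", "was", "it", "this", "and", "of", "to", "i"}

def tokenize(text):
    """Lowercase the text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z']+", text.lower())

def preprocess(text):
    """Tokenize the text and drop stopwords."""
    return [tok for tok in tokenize(text) if tok not in STOPWORDS]

def ngrams(tokens, n):
    """Return the n-grams (as tuples) over a token sequence."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

def bag_of_words(tokens):
    """Map each token to its count in the document."""
    return Counter(tokens)

class NaiveBayes:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, docs, labels):
        self.class_counts = Counter(labels)
        self.word_counts = {label: Counter() for label in self.class_counts}
        for doc, label in zip(docs, labels):
            self.word_counts[label].update(preprocess(doc))
        self.vocab = set()
        for counts in self.word_counts.values():
            self.vocab.update(counts)
        return self

    def predict(self, doc):
        tokens = preprocess(doc)
        total_docs = sum(self.class_counts.values())
        best_label, best_score = None, -math.inf
        for label, n_docs in self.class_counts.items():
            counts = self.word_counts[label]
            denom = sum(counts.values()) + len(self.vocab)
            score = math.log(n_docs / total_docs)  # log prior
            for tok in tokens:
                if tok in self.vocab:  # ignore words never seen in training
                    score += math.log((counts[tok] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label

# Hypothetical toy training data, invented for this sketch.
train_docs = [
    "I loved this movie, it was great",
    "A great and enjoyable film",
    "This movie was terrible",
    "I hated the boring plot",
]
train_labels = ["pos", "pos", "neg", "neg"]

model = NaiveBayes().fit(train_docs, train_labels)
print(preprocess("The movie was great"))
print(ngrams(preprocess("I hated the boring plot"), 2))
print(model.predict("a great film"))
```

Stemming, lemmatization, word embeddings, and LDA are left out here because they need language resources or trained models that do not fit in a short self-contained example.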

This mind map was published on 7 November 2023 and has been viewed 99 times.