How does DropConnect differ from dropout in neural networks?

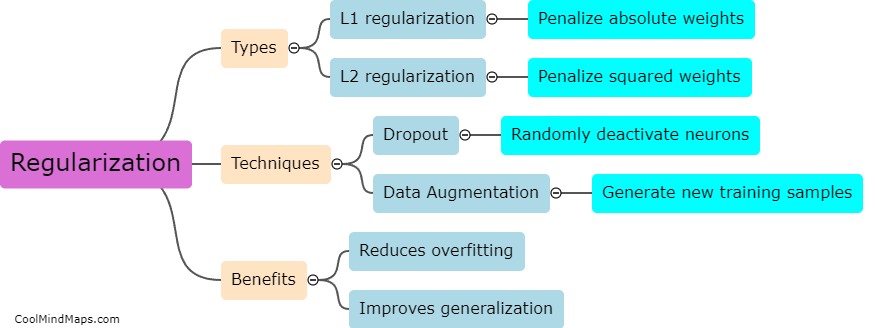

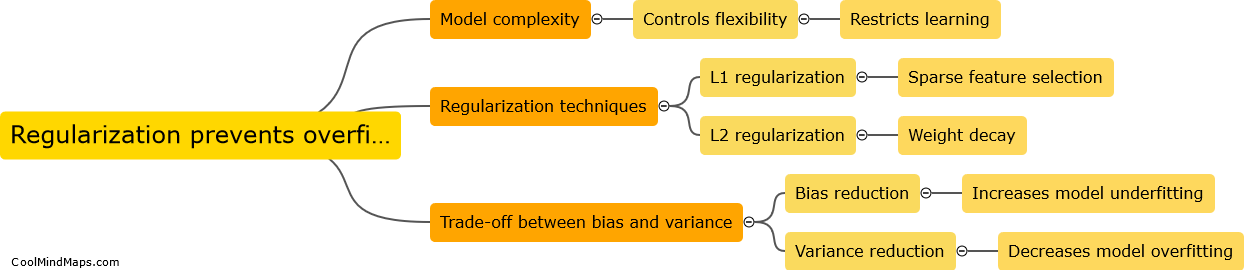

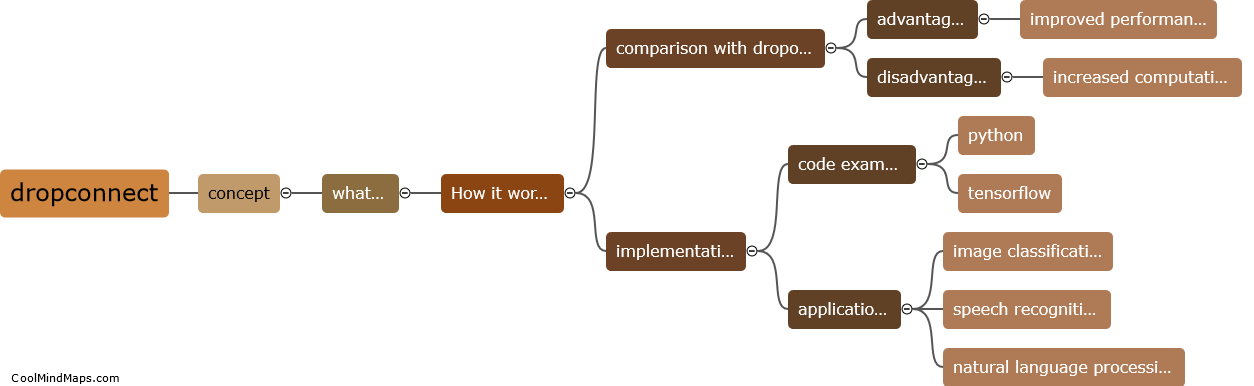

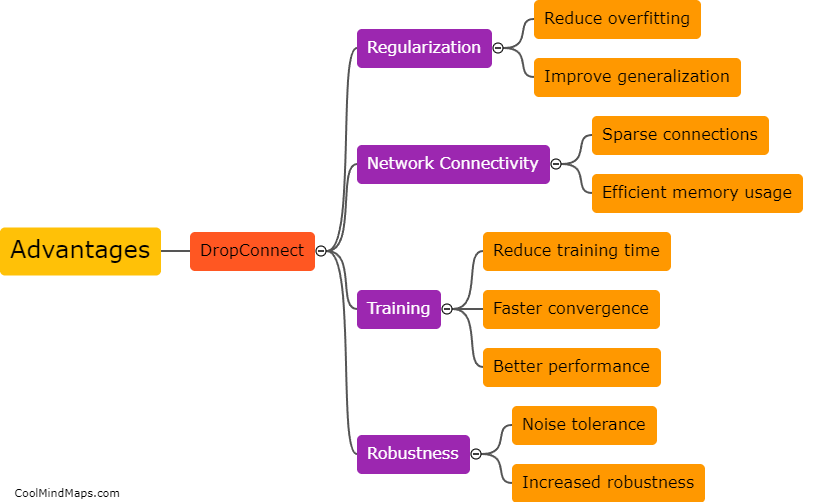

DropConnect and dropout are regularization techniques used in neural networks to reduce overfitting. Dropout randomly sets a fraction of a layer's units (their output activations) to zero during training, effectively "dropping out" those neurons for that training step. This forces the network to learn more robust features and discourages co-adaptation, where neurons come to rely on the presence of specific other neurons.

DropConnect instead operates at the weight level: it randomly sets a fraction of the individual weights to zero during training. Rather than silencing a neuron's entire output, it removes single connections, so each neuron still computes an output but receives only a random subset of its inputs. Dropout can therefore be viewed as a special case of DropConnect in which all connections belonging to a dropped unit are removed together, and DropConnect, like dropout, also discourages co-adaptation.

Overall, the two methods share the same goal of regularization, but they differ in the level at which they introduce sparsity: dropout masks neuron activations, while DropConnect masks the weights of individual connections. Because a layer has many more weights than units, DropConnect's random masks are much finer-grained.
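To make the difference in granularity concrete, here is a minimal pure-Python sketch of one fully connected layer with a ReLU activation, written for illustration rather than taken from any library. The function names (`matvec`, `dropout_forward`, `apply_weight_mask`, `dropconnect_forward`) are hypothetical. It assumes "inverted" scaling by 1/(1-p) during training for both methods; applying that scaling to DropConnect weights is a simplification and not necessarily how DropConnect handles inference in its original formulation.

```python
import math
import random


def matvec(W, x):
    # W has one row per output unit and one column per input: y[j] = sum_i W[j][i] * x[i]
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def relu(v):
    return [max(0.0, z) for z in v]


def dropout_forward(x, W, p, rng):
    """Dropout: compute the layer's activations, then zero each one with probability p.
    Surviving activations are scaled by 1/(1-p) ("inverted dropout"). Requires 0 <= p < 1."""
    h = relu(matvec(W, x))
    keep = [rng.random() >= p for _ in h]  # one Bernoulli draw per output unit
    out = [hj / (1.0 - p) if k else 0.0 for hj, k in zip(h, keep)]
    return out, keep


def apply_weight_mask(x, W, keep, p):
    """Zero the weights where keep is False, scale the rest by 1/(1-p), then run the layer.
    The 1/(1-p) scaling is an assumption borrowed from inverted dropout."""
    W_masked = [[w / (1.0 - p) if k else 0.0 for w, k in zip(row, krow)]
                for row, krow in zip(W, keep)]
    return relu(matvec(W_masked, x))


def dropconnect_forward(x, W, p, rng):
    """DropConnect: zero each individual weight with probability p, then run the layer."""
    keep = [[rng.random() >= p for _ in row] for row in W]  # one Bernoulli draw per weight
    return apply_weight_mask(x, W, keep, p), keep


if __name__ == "__main__":
    rng = random.Random(0)
    W = [[0.5, -0.2, 0.1, 0.3],
         [0.4, 0.4, -0.6, 0.2],
         [-0.1, 0.7, 0.2, 0.5]]  # 3 output units, 4 inputs
    x = [1.0, 2.0, 0.5, -1.0]
    p = 0.5

    out_do, unit_keep = dropout_forward(x, W, p, rng)
    out_dc, weight_keep = dropconnect_forward(x, W, p, rng)
    print("dropout mask bits:", len(unit_keep))                        # 3: one per unit
    print("dropconnect mask bits:", sum(len(r) for r in weight_keep))  # 12: one per weight

    # Dropout equals DropConnect with a mask that is constant along each row of W
    row_keep = [[k] * len(W[0]) for k in unit_keep]
    same = apply_weight_mask(x, W, row_keep, p)
    print(all(math.isclose(a, b) for a, b in zip(same, out_do)))       # True
```

Dropout draws one random bit per unit (3 in this layer), while DropConnect draws one per weight (12). The final check shows the special-case relationship: zeroing a whole row of `W` silences that unit exactly as dropout would, because ReLU maps 0 to 0 and commutes with positive scaling.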

This mind map was published on 4 September 2023.