What are the different types of regularization techniques for neural networks?

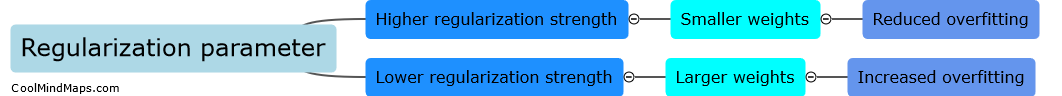

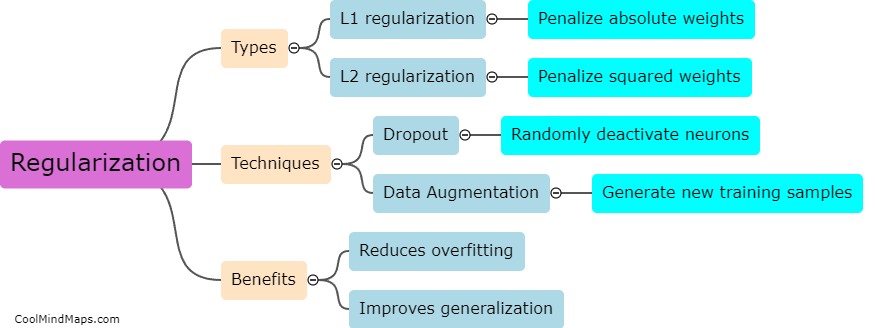

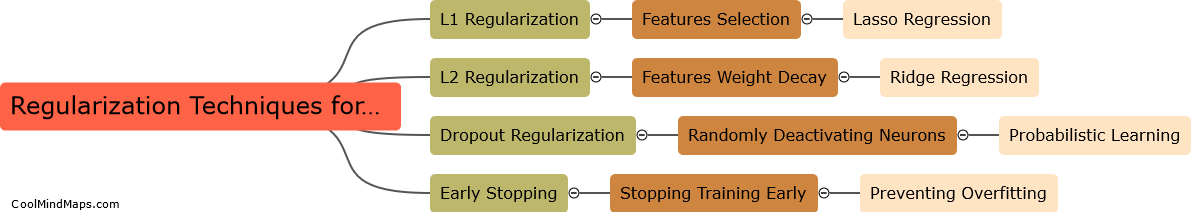

Regularization techniques for neural networks aim to prevent overfitting and improve the model's ability to generalize to unseen data. Several types are in common use.

L1 and L2 regularization add a penalty term to the loss function that discourages large weights. L1 penalizes the sum of the absolute values of the weights, and L2 penalizes the sum of their squares. L1 regularization encourages sparsity by driving many weights to exactly zero, which can yield a more compact and interpretable model. L2 regularization shrinks weights toward zero but rarely makes them exactly zero. With plain stochastic gradient descent it is equivalent to weight decay, which keeps the weights from growing too large.

Dropout randomly sets a fraction of the neurons' outputs to zero at each training step. This forces the network to rely on many different subsets of neurons and reduces co-adaptation, where neurons become useful only in combination with specific other neurons. At inference time, no neurons are dropped.

Early stopping monitors the model's performance on a held-out validation set and halts training once that performance stops improving, before the model starts to overfit the training data.

Data augmentation artificially expands the training set by applying random transformations to the inputs, such as rotations, translations, or flips. This increases the effective size and diversity of the dataset without collecting new examples.

Together, these techniques reduce overfitting and help neural networks generalize beyond their training data.
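
The L1 and L2 penalties described above can be sketched in plain Python. This is an illustrative sketch, not any framework's API. It assumes the weights are a flat list of floats and uses the common convention of writing the L2 term as (λ/2)·Σw², so its gradient is simply λ·w. The function names and the gradient values are hypothetical. The last two functions show why L2 regularization under plain SGD is the same as weight decay: adding λ·w to the gradient is algebraically identical to first shrinking each weight by a factor (1 − lr·λ).

```python
def l1_penalty(weights, lam):
    """L1 penalty: lam * sum(|w|). Tends to push weights to exactly zero."""
    return lam * sum(abs(w) for w in weights)


def l2_penalty(weights, lam):
    """L2 penalty: (lam / 2) * sum(w^2). The 1/2 is a common convention
    that makes the gradient of this term simply lam * w."""
    return 0.5 * lam * sum(w * w for w in weights)


def regularized_loss(data_loss, weights, l1=0.0, l2=0.0):
    """Total loss = data loss + optional L1 and L2 penalty terms."""
    return data_loss + l1_penalty(weights, l1) + l2_penalty(weights, l2)


def sgd_step_l2(weights, grads, lr, lam):
    """One SGD step on the L2-regularized loss: the penalty adds lam * w
    to each weight's data-loss gradient."""
    return [w - lr * (g + lam * w) for w, g in zip(weights, grads)]


def sgd_step_weight_decay(weights, grads, lr, lam):
    """The same step written as weight decay: shrink each weight by the
    factor (1 - lr * lam), then apply the plain data-loss gradient."""
    return [(1 - lr * lam) * w - lr * g for w, g in zip(weights, grads)]


weights = [0.5, -1.0, 2.0]
grads = [0.1, -0.2, 0.3]  # hypothetical data-loss gradients
print(regularized_loss(1.0, weights, l1=0.1, l2=0.1))
print(sgd_step_l2(weights, grads, lr=0.1, lam=0.01))
print(sgd_step_weight_decay(weights, grads, lr=0.1, lam=0.01))
```

The two update functions print the same weights up to floating-point rounding. This equivalence holds for plain SGD. Adaptive optimizers such as Adam rescale the gradient, so adding an L2 term there is no longer the same as decaying the weights directly.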
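
Dropout, as described above, can be illustrated on a single list of activations. This sketch uses "inverted dropout," a common variant. During training, each activation is zeroed with probability `p`, and the survivors are scaled by 1/(1 − p) so the expected output matches the input. At inference the layer becomes a no-op. The original formulation instead rescaled weights at test time. The function and its parameters are hypothetical and written in plain Python. In practice a framework layer such as PyTorch's `torch.nn.Dropout` handles this.

```python
import random


def dropout(activations, p, training=True, rng=None):
    """Inverted dropout on a list of activations.

    During training, each activation is zeroed with probability p and the
    survivors are scaled by 1 / (1 - p), so every output keeps the same
    expected value as its input. At inference (training=False) the input
    is returned unchanged, so no test-time rescaling is needed.
    """
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    if not training or p == 0.0:
        return list(activations)
    rng = rng or random.Random()
    keep_prob = 1.0 - p
    # rng.random() is uniform on [0, 1), so it is >= p with probability 1 - p.
    return [a / keep_prob if rng.random() >= p else 0.0 for a in activations]


rng = random.Random(0)
hidden = [0.2, 1.5, -0.7, 3.0, 0.9]  # illustrative activations
print(dropout(hidden, p=0.5, rng=rng))         # random mask; survivors doubled
print(dropout(hidden, p=0.5, training=False))  # unchanged at inference
```

Because a new random mask is drawn at every call, each training step effectively trains a different thinned sub-network. This is what discourages neurons from co-adapting.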
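
Early stopping is usually implemented with a "patience" rule. Training stops once the validation loss has failed to improve for a fixed number of consecutive epochs, and the weights from the best epoch are kept. The sketch below assumes that rule and replays a made-up validation curve instead of training a real model. `EarlyStopping` and `train_with_early_stopping` are hypothetical names, and the loss values are illustrative only. Keras offers a similar built-in callback, `tf.keras.callbacks.EarlyStopping`, with `patience`, `min_delta`, and `restore_best_weights` options.

```python
class EarlyStopping:
    """Signal a stop when validation loss has not improved by more than
    `min_delta` for `patience` consecutive epochs."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best_loss = float("inf")
        self.best_epoch = None
        self.bad_epochs = 0

    def step(self, epoch, val_loss):
        """Record one epoch's validation loss; return True to stop training."""
        if val_loss < self.best_loss - self.min_delta:
            # Improvement: remember this epoch (a real training loop would
            # also save a checkpoint of the model weights here).
            self.best_loss = val_loss
            self.best_epoch = epoch
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


def train_with_early_stopping(val_losses, patience=3):
    """Replay per-epoch validation losses and return
    (epoch at which training stopped, best epoch)."""
    stopper = EarlyStopping(patience=patience)
    for epoch, loss in enumerate(val_losses):
        if stopper.step(epoch, loss):
            return epoch, stopper.best_epoch
    return len(val_losses) - 1, stopper.best_epoch


# Made-up validation curve: it improves, then rises as the model overfits.
curve = [0.90, 0.70, 0.60, 0.62, 0.61, 0.65, 0.70, 0.80]
print(train_with_early_stopping(curve, patience=3))  # prints (5, 2)
```

The small upticks at epochs 3 and 4 do not trigger a stop by themselves. Patience trades a few extra epochs for robustness to noisy validation measurements.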
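
Data augmentation, as described above, can be sketched on a toy grayscale image stored as a list of rows. This sketch implements only random horizontal flips and integer pixel translations. Rotations need interpolation and are normally delegated to an image library. All names are hypothetical. The sketch also assumes the transformations preserve the label. For example, a horizontally flipped photo of a cat is still a cat, but flipping is not appropriate for tasks such as reading text.

```python
import random


def horizontal_flip(image):
    """Mirror a 2-D image (list of rows) left to right."""
    return [list(reversed(row)) for row in image]


def translate(image, dx, dy, fill=0):
    """Shift an image dx pixels right and dy pixels down (negative values
    shift left/up); pixels shifted in from outside are set to `fill`."""
    h, w = len(image), len(image[0])
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            src_y, src_x = y - dy, x - dx
            if 0 <= src_y < h and 0 <= src_x < w:
                out[y][x] = image[src_y][src_x]
    return out


def augment(image, rng, max_shift=1, flip_prob=0.5):
    """Randomly flip, then randomly translate by up to max_shift pixels."""
    if rng.random() < flip_prob:
        image = horizontal_flip(image)
    dx = rng.randint(-max_shift, max_shift)
    dy = rng.randint(-max_shift, max_shift)
    return translate(image, dx, dy)


rng = random.Random(0)
toy_image = [
    [0, 1, 1, 0],
    [0, 0, 1, 0],
    [0, 0, 1, 0],
    [0, 1, 1, 1],
]
# A fresh random variant is drawn each time the example is used in training.
for _ in range(3):
    print(augment(toy_image, rng))
```

Because a different variant is generated every time an image is seen, the model rarely sees exactly the same input twice. This is how augmentation increases the effective size and diversity of the training set.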

This mind map was published on 20 August 2023.