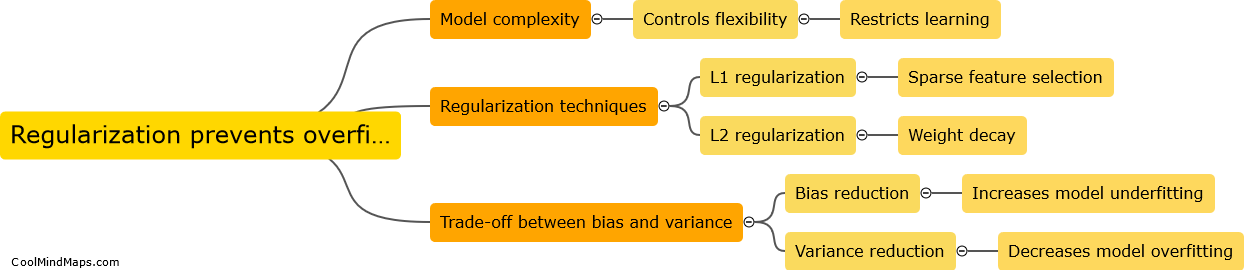

How does regularization prevent overfitting in neural networks?

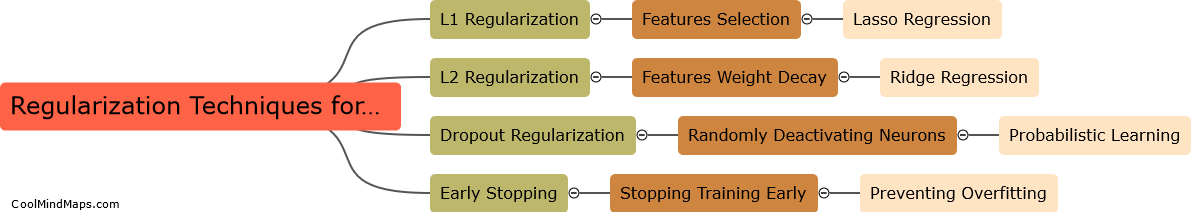

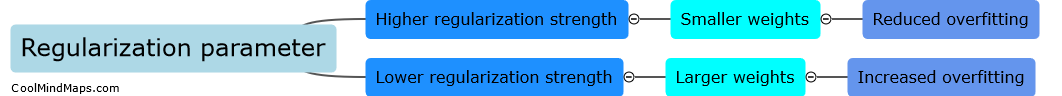

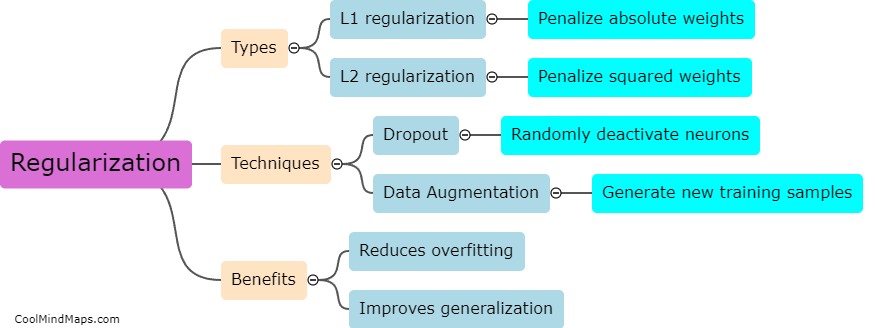

Regularization is a set of techniques used in neural networks to prevent overfitting, a common problem in machine learning in which a model memorizes the training data instead of learning general patterns. Overfitting occurs when a model has enough capacity to capture noise or irrelevant features in the training set, so it performs well on that set but poorly on unseen data. A common form of regularization adds a penalty term to the loss function that grows with the size of the network's weights, which discourages overly complex solutions. The two standard choices are L2 regularization, which adds λ times the sum of the squared weights, and L1 regularization, which adds λ times the sum of the absolute values of the weights. The hyperparameter λ controls how strongly large weights are penalized. Because the optimizer must now trade training error against this penalty, the model is less able to fit noise in the training data, which leads to a more general, robust model that performs better on unseen data.
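The penalized loss described above can be illustrated with a minimal sketch. To keep it dependency-free, it assumes a plain linear model without a bias term in place of a neural network. The same penalty applies unchanged to the weight matrices of a network, and many frameworks expose it as "weight decay". The function names (`mse`, `l2_penalty`, `l1_penalty`, `fit_l2`, `norm`), the toy data, and the values `lam=0.1`, `lr=0.05`, and `steps=2000` are hypothetical choices for illustration. The loss being minimized is MSE + λ Σ w_j² for L2, or MSE + λ Σ |w_j| for L1.

```python
import random


def mse(w, xs, ys):
    """Mean squared error of a linear model y_hat = w . x (no bias term, for brevity)."""
    return sum((sum(wj * xj for wj, xj in zip(w, x)) - y) ** 2
               for x, y in zip(xs, ys)) / len(xs)


def l2_penalty(w, lam):
    """L2 (ridge / weight-decay) term: lam * sum of squared weights."""
    return lam * sum(wj * wj for wj in w)


def l1_penalty(w, lam):
    """L1 (lasso) term: lam * sum of absolute weights."""
    return lam * sum(abs(wj) for wj in w)


def fit_l2(xs, ys, lam, lr=0.05, steps=2000):
    """Plain gradient descent on mse + l2_penalty, starting from all-zero weights."""
    n, d = len(xs), len(xs[0])
    w = [0.0] * d
    for _ in range(steps):
        grad = [0.0] * d
        for x, y in zip(xs, ys):
            err = sum(wj * xj for wj, xj in zip(w, x)) - y
            for j in range(d):
                grad[j] += 2.0 * err * x[j] / n  # gradient of the MSE term
        for j in range(d):
            grad[j] += 2.0 * lam * w[j]  # gradient of lam * w_j**2 pulls w_j toward 0
        w = [wj - lr * gj for wj, gj in zip(w, grad)]
    return w


def norm(w):
    """Euclidean length of the weight vector."""
    return sum(wj * wj for wj in w) ** 0.5


# Hypothetical toy data: 10 samples and 8 features, but only feature 0
# drives the target. The other 7 features give the model room to fit noise.
rng = random.Random(0)
xs = [[rng.uniform(-1, 1) for _ in range(8)] for _ in range(10)]
ys = [2.0 * x[0] + rng.gauss(0, 0.3) for x in xs]

w_plain = fit_l2(xs, ys, lam=0.0)  # no regularization
w_ridge = fit_l2(xs, ys, lam=0.1)  # L2-regularized

print("train MSE   plain: %.4f  ridge: %.4f" % (mse(w_plain, xs, ys), mse(w_ridge, xs, ys)))
print("weight norm plain: %.4f  ridge: %.4f" % (norm(w_plain), norm(w_ridge)))
```

With the penalty switched on, the learned weight vector is shorter. This is the "smaller, simpler weights" effect the paragraph describes. The sketch only fits the L2 case because the L1 term is not differentiable at zero, so fitting it typically needs a subgradient or proximal step instead of the plain gradient used here.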

This mind map was published on 20 August 2023 and has been viewed 104 times.