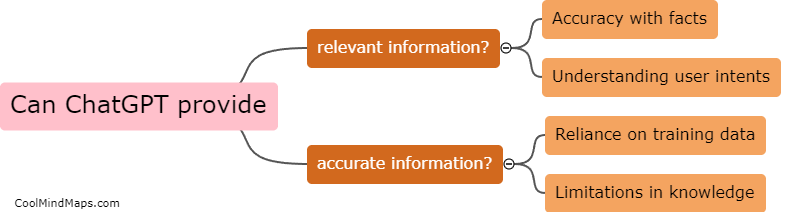

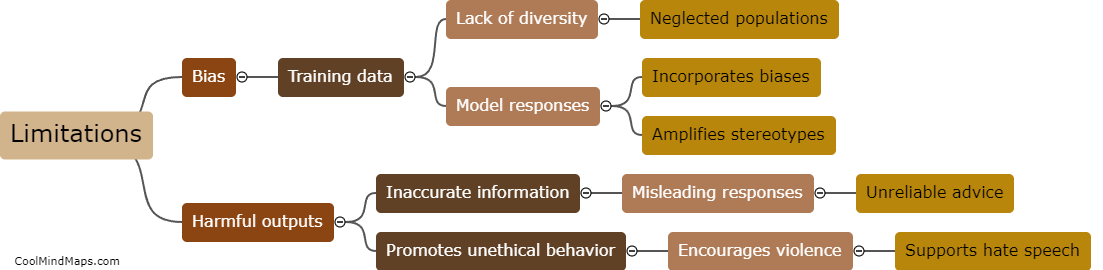

What are the limitations of ChatGPT in terms of bias and harmful outputs?

ChatGPT, like other AI language models, has limitations when it comes to bias and harmful outputs. First, it tends to replicate biases present in its training data, which can reflect societal prejudices and unfairness. This can result in biased or discriminatory responses that perpetuate stereotypes and inequality. Second, it may provide false or misleading information, especially on controversial or sensitive topics; it lacks a reliable fact-checking mechanism, which makes it susceptible to spreading misinformation. Third, ChatGPT can generate harmful or offensive content, including hate speech or explicit text, in response to user prompts. Despite efforts to mitigate these issues, the risk of biased and harmful outputs remains, and ethical, responsible use requires continuous monitoring and refinement.

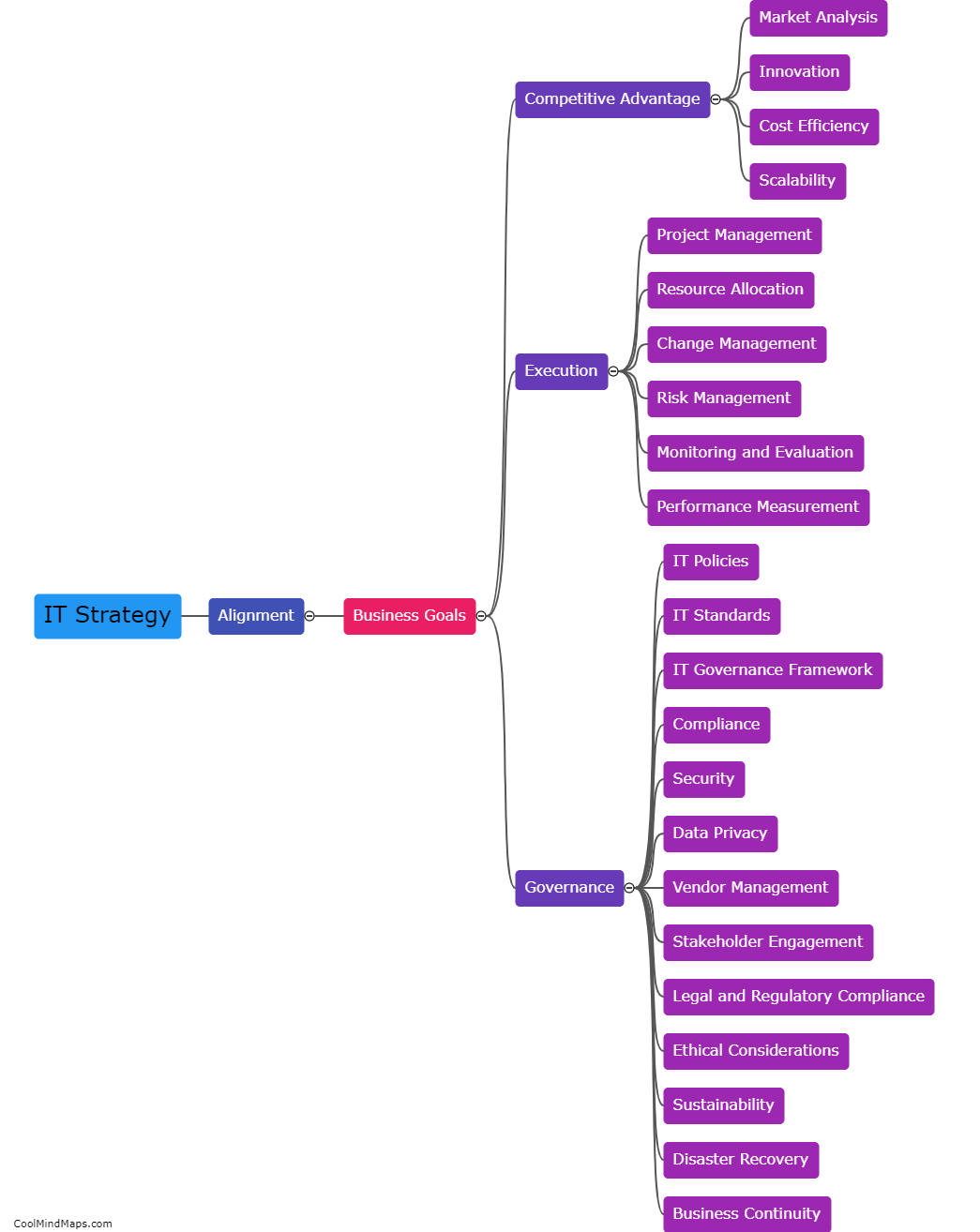

This mind map was published on 5 December 2023 and has been viewed 77 times.