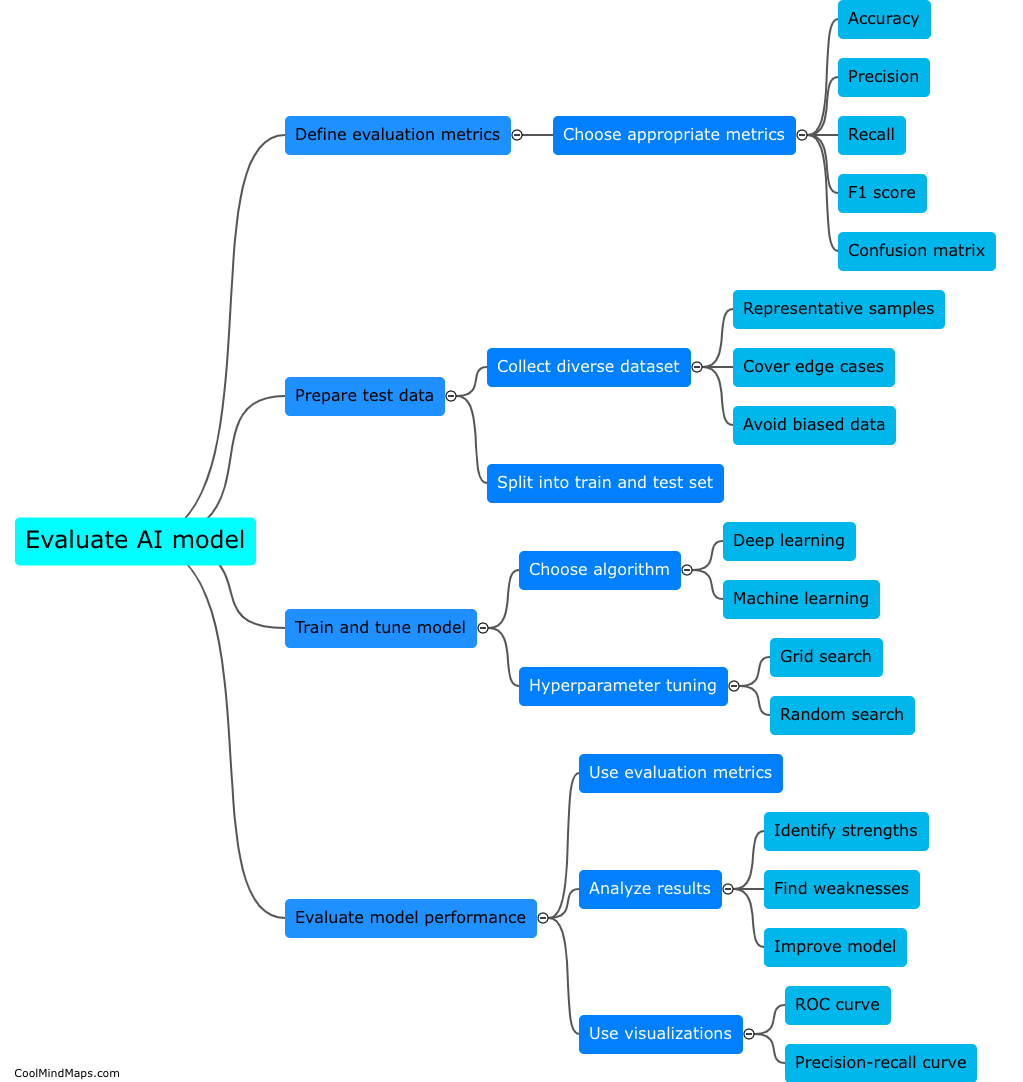

How to evaluate an AI model?

Evaluating an AI model is crucial to ensure its accuracy, reliability, and robustness. The standard approach is to measure its performance on a held-out test dataset that is kept separate from the data used to train the model. Depending on the problem, performance is measured with metrics such as accuracy, precision, recall, and F1 score; accuracy alone can be misleading when classes are imbalanced, which is where precision and recall become more informative. It is also important to check that the model is neither overfitting (performing well on the training data but poorly on unseen data) nor underfitting (performing poorly on both), and to analyze its individual predictions to understand its strengths and weaknesses. Finally, the model should be re-evaluated and refined as new data becomes available so that it continues to perform well.
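As a rough illustration of the metrics named above, here is a minimal sketch in plain Python for a binary classification problem. The function name `binary_classification_metrics` and the example labels are hypothetical and chosen only for illustration; in practice a library such as scikit-learn would usually be used instead.

```python
from typing import Dict, Sequence


def binary_classification_metrics(
    y_true: Sequence[int], y_pred: Sequence[int]
) -> Dict[str, float]:
    """Compute accuracy, precision, recall and F1 for 0/1 labels.

    Hypothetical helper for illustration; label 1 is the positive class.
    """
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    if len(y_true) == 0:
        raise ValueError("at least one example is required")

    # Count the four cells of the confusion matrix.
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)

    accuracy = (tp + tn) / len(pairs)
    # Fall back to 0.0 when a denominator is zero (no positive predictions
    # or no positive labels); this convention is an assumption of the sketch.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if (precision + recall)
        else 0.0
    )
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


# Hypothetical held-out test labels and the model's predictions for them.
y_test = [1, 0, 1, 1, 0, 0, 1, 0]
y_hat = [1, 0, 0, 1, 0, 1, 1, 0]
metrics = binary_classification_metrics(y_test, y_hat)
print(metrics)
```

Running the same function on the training data and comparing the two results gives a first signal about fit: a large gap between training and test scores suggests overfitting, while low scores on both suggest underfitting.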

This mind map was published on 24 May 2023.