What are the limitations of DropConnect in neural networks?

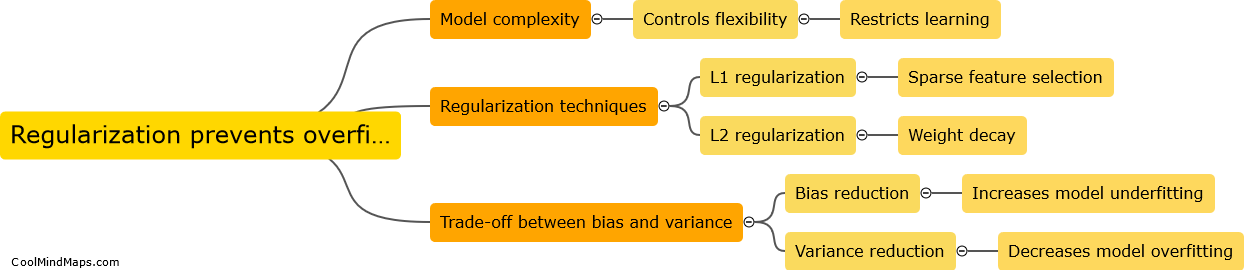

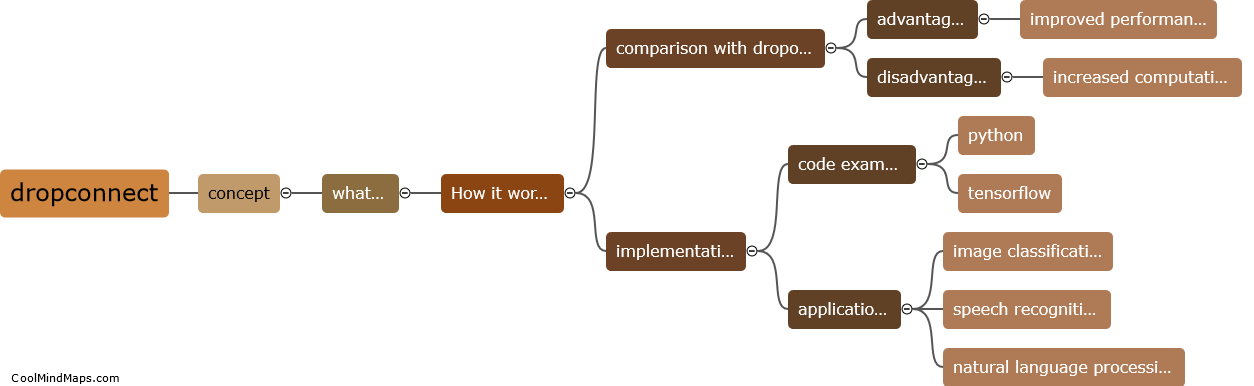

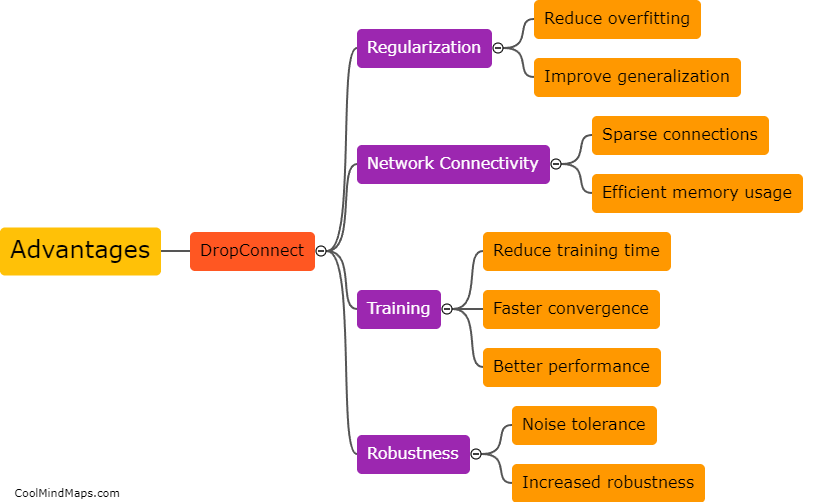

DropConnect is a regularization technique in which individual weights within a layer, rather than whole activations as in dropout, are randomly set to zero during training. It often improves generalization, but it has several limitations. First, it reduces overfitting without eliminating it: a model can still become too specialized to the training data and perform poorly on unseen data, so DropConnect is typically used alongside other measures such as weight decay, early stopping, or data augmentation. Second, because a random subset of connections is zeroed at every training step, the effective capacity of the network during training is reduced, and a drop rate that is too high can cause underfitting and limit the network's ability to learn complex patterns. Third, DropConnect adds computational and memory overhead. It does not add trainable parameters, but it needs one random mask entry per weight instead of one per activation, and in the original formulation a separate mask is drawn for each training example. Inference in that formulation is also more involved than in dropout, since it relies on an approximation rather than a single rescaled forward pass. These costs can limit its practicality for larger neural networks.
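To make the mask overhead concrete, here is a minimal NumPy sketch of a DropConnect forward pass for one fully connected layer. It is an illustration under stated assumptions, not a reference implementation: the names `dropconnect_forward` and `mask_sizes` are hypothetical, and the sketch rescales by the keep probability during training ("inverted" scaling) so that evaluation is a plain dense pass, which is a simplification and not necessarily how any particular paper or library handles inference.

```python
import numpy as np


def dropconnect_forward(x, W, b, drop_prob, rng, per_example=True, training=True):
    """Forward pass of a dense layer with DropConnect (illustrative sketch).

    x: (batch, n_in) inputs, W: (n_in, n_out) weights, b: (n_out,) bias.
    Assumption: inverted scaling is used, so evaluation needs no mask.
    """
    if not training or drop_prob == 0.0:
        return x @ W + b

    keep_prob = 1.0 - drop_prob
    if per_example:
        # One Bernoulli mask entry per weight AND per example:
        # shape (batch, n_in, n_out). This is the costly variant.
        mask = rng.random((x.shape[0],) + W.shape) < keep_prob
        out = np.einsum("bi,bio->bo", x, mask * W)
    else:
        # Cheaper variant: one mask shared by the whole mini-batch.
        mask = rng.random(W.shape) < keep_prob
        out = x @ (mask * W)

    # Rescale so the expected pre-activation matches the dense layer.
    return out / keep_prob + b


def mask_sizes(batch, n_in, n_out):
    """Number of random mask entries drawn per training step."""
    return {
        "dropout": batch * n_out,  # mask on the layer's output activations
        "dropconnect_shared": n_in * n_out,
        "dropconnect_per_example": batch * n_in * n_out,
    }


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.standard_normal((4, 3))
    W = rng.standard_normal((3, 2))
    b = np.zeros(2)
    print(dropconnect_forward(x, W, b, drop_prob=0.5, rng=rng).shape)
    print(mask_sizes(batch=128, n_in=512, n_out=512))
```

For a 512-by-512 layer and a batch of 128, the per-example variant draws 128 × 512 × 512 mask entries per step, compared with 128 × 512 for dropout on the same layer's outputs. Sharing one mask across the batch removes the batch factor, at the price of less independent noise per example.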

This mind map was published on 4 September 2023.