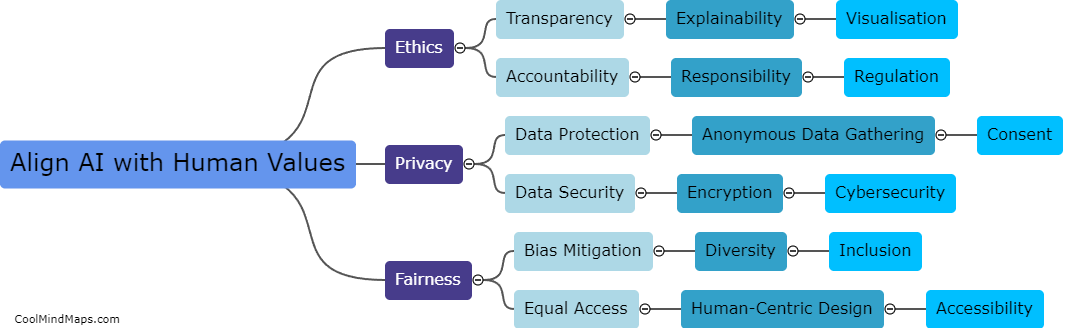

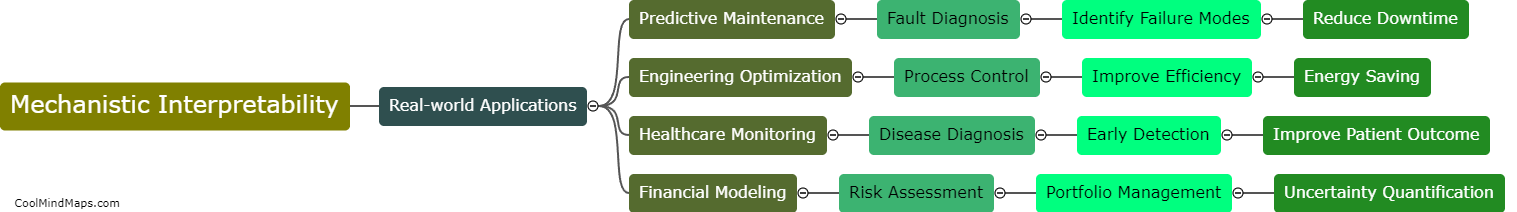

How can we achieve mechanistic interpretability?

Mechanistic interpretability is the ability to understand the underlying mechanisms that govern how a system or process behaves. Achieving it requires identifying the key variables and the relationships among them, then building a mathematical model that accurately describes the system. This typically combines experimental data, theoretical analysis, and computational modeling, and the resulting model must be validated against relevant experiments to confirm its accuracy.

A validated mechanistic model can then be used to predict how the system behaves under different conditions and to design interventions that modify or control that behavior. This matters in fields such as biology, medicine, and engineering, where understanding the underlying mechanisms is essential for developing effective therapies and technologies.
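The workflow described above (propose a model, fit it to data, validate it, then predict) can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the system is a hypothetical quantity that decays at a first-order rate, the "measurements" are synthetic and noise-free, and the names `simulate` and `fit_decay` are invented for this example.

```python
import math

def simulate(x0, k, t):
    """Hypothetical mechanistic model: first-order decay, x(t) = x0 * exp(-k * t)."""
    return x0 * math.exp(-k * t)

def fit_decay(times, values):
    """Estimate (x0, k) by least squares on log(x) = log(x0) - k * t."""
    n = len(times)
    logs = [math.log(v) for v in values]
    t_mean = sum(times) / n
    y_mean = sum(logs) / n
    sxx = sum((t - t_mean) ** 2 for t in times)
    sxy = sum((t - t_mean) * (y - y_mean) for t, y in zip(times, logs))
    slope = sxy / sxx
    intercept = y_mean - slope * t_mean
    return math.exp(intercept), -slope

# Synthetic, noise-free "measurements" from made-up parameters (assumption).
true_x0, true_k = 10.0, 0.5
train_t = [0.0, 1.0, 2.0, 3.0]
train_x = [simulate(true_x0, true_k, t) for t in train_t]

# Step 1: fit the model parameters to the observed data.
x0_hat, k_hat = fit_decay(train_t, train_x)

# Step 2: validate against held-out observations not used for fitting.
test_t = [4.0, 5.0]
test_x = [simulate(true_x0, true_k, t) for t in test_t]
max_rel_error = max(
    abs(simulate(x0_hat, k_hat, t) - x) / x for t, x in zip(test_t, test_x)
)

# Step 3: predict behavior under a different condition (doubled starting amount).
prediction = simulate(2 * x0_hat, k_hat, 6.0)

print(f"x0={x0_hat:.3f}, k={k_hat:.3f}, max relative error={max_rel_error:.2e}")
print(f"predicted value at t=6 with doubled start: {prediction:.4f}")
```

With real measurements the data would be noisy, so the held-out error would not be near zero, and how large an error is acceptable depends on the application.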

This mind map was published on 22 May 2023 and has been viewed 100 times.