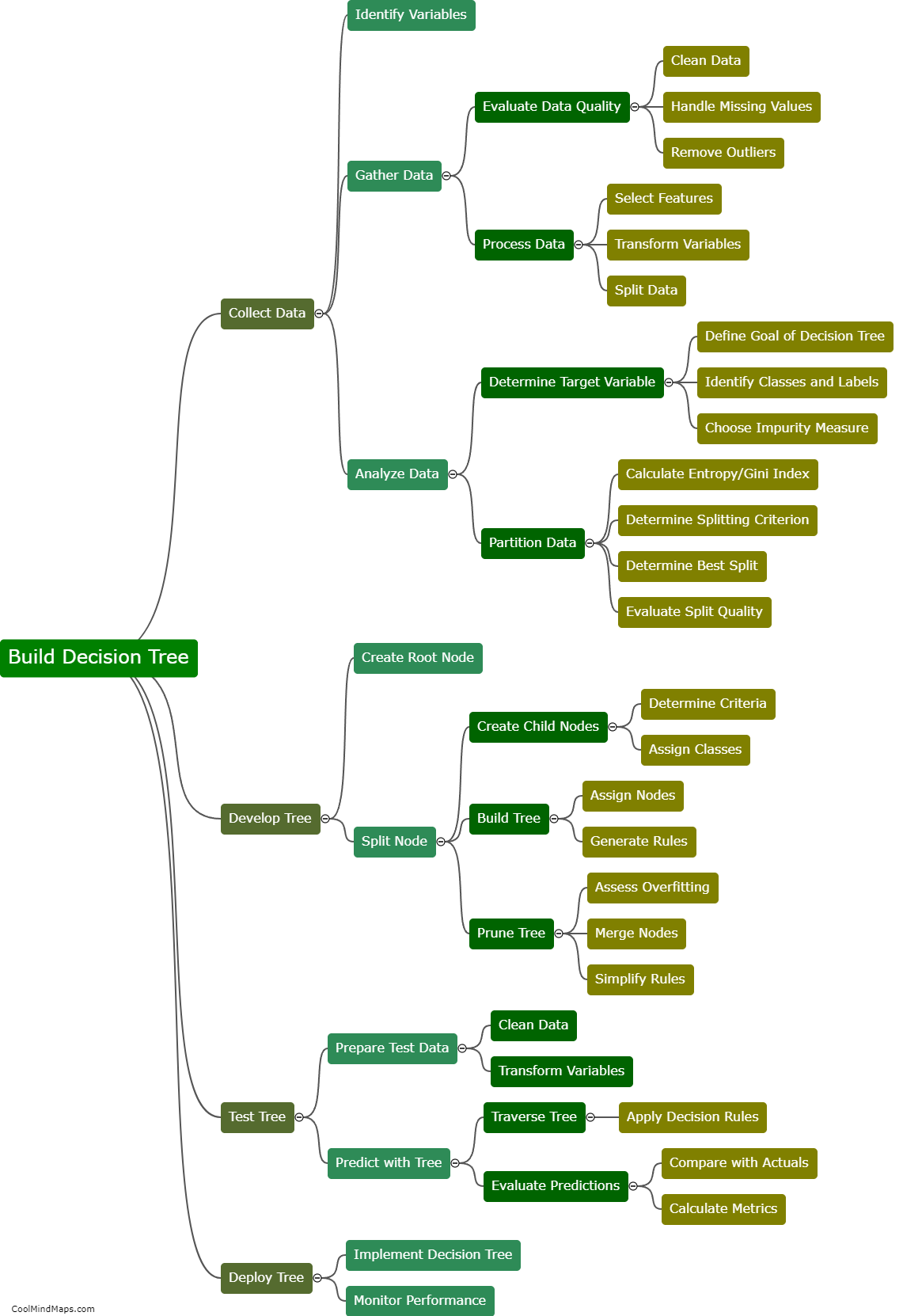

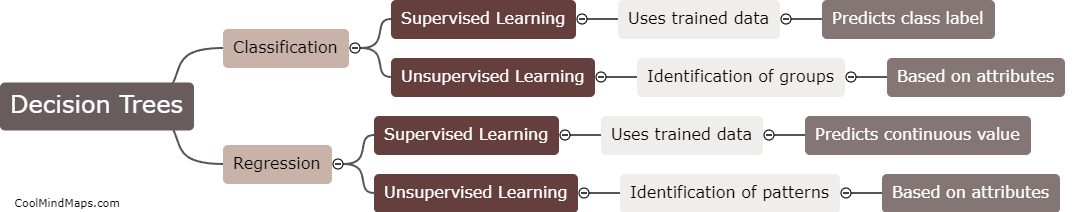

How can decision trees be used for classification and regression?

Decision trees can be used for both classification and regression. In either case, the tree recursively splits the data on feature values, partitioning the feature space into distinct regions, and each leaf node corresponds to one region. In classification, splits are chosen to optimize a criterion such as maximizing information gain or minimizing Gini impurity, and each leaf assigns the majority class of the training instances in its region. In regression, the target is a continuous variable: splits are typically chosen to reduce the variance (squared error) of the target within each region, and each leaf predicts a numeric value, usually the mean of the training instances that fall in it. Because the resulting model reads as a set of if-then rules on feature values, decision trees offer a versatile and intuitive approach to both tasks.
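
To make the difference between the two tasks concrete, here is a minimal sketch of a one-split tree (a "stump") on a single feature, in plain Python. It is an illustration only, not how any particular library implements trees. The function names (`gini`, `variance`, `best_threshold`, `fit_stump`, `predict`) and the toy data are assumptions made for this example. The same split search is used for both tasks; only the impurity measure and the leaf value change.

```python
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels (0.0 means pure)."""
    n = len(labels)
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def variance(values):
    """Mean squared deviation from the mean, used as regression impurity."""
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)


def best_threshold(xs, ys, impurity):
    """Find the threshold that minimizes the weighted impurity of both sides.

    Assumes xs contains at least two distinct values.
    """
    best_t, best_score = None, float("inf")
    distinct = sorted(set(xs))
    # Candidate thresholds are midpoints between consecutive feature values.
    for lo, hi in zip(distinct, distinct[1:]):
        t = (lo + hi) / 2
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        score = (len(left) * impurity(left) + len(right) * impurity(right)) / len(ys)
        if score < best_score:
            best_t, best_score = t, score
    return best_t


def fit_stump(xs, ys, task):
    """Fit a single split; return (threshold, left_leaf_value, right_leaf_value)."""
    impurity = gini if task == "classification" else variance
    t = best_threshold(xs, ys, impurity)
    left = [y for x, y in zip(xs, ys) if x <= t]
    right = [y for x, y in zip(xs, ys) if x > t]
    if task == "classification":
        # Classification leaf: majority class in the region.
        leaf = lambda group: Counter(group).most_common(1)[0][0]
    else:
        # Regression leaf: mean target value in the region.
        leaf = lambda group: sum(group) / len(group)
    return t, leaf(left), leaf(right)


def predict(stump, x):
    """Route x to the left or right leaf and return that leaf's value."""
    t, left_value, right_value = stump
    return left_value if x <= t else right_value


# Toy data (made up for illustration): one feature, two kinds of target.
xs = [1.0, 2.0, 3.0, 6.0, 7.0, 8.0]
labels = ["a", "a", "a", "b", "b", "b"]
targets = [1.0, 1.2, 0.8, 5.0, 5.5, 4.5]

clf = fit_stump(xs, labels, "classification")
reg = fit_stump(xs, targets, "regression")
print(predict(clf, 2.5), predict(reg, 7.5))
```

A full tree would apply `fit_stump` recursively to each side until a stopping rule is met (for example a maximum depth or a minimum number of instances per leaf) and would search over every feature rather than just one.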

This mind map was published on 20 December 2023 and has been viewed 84 times.