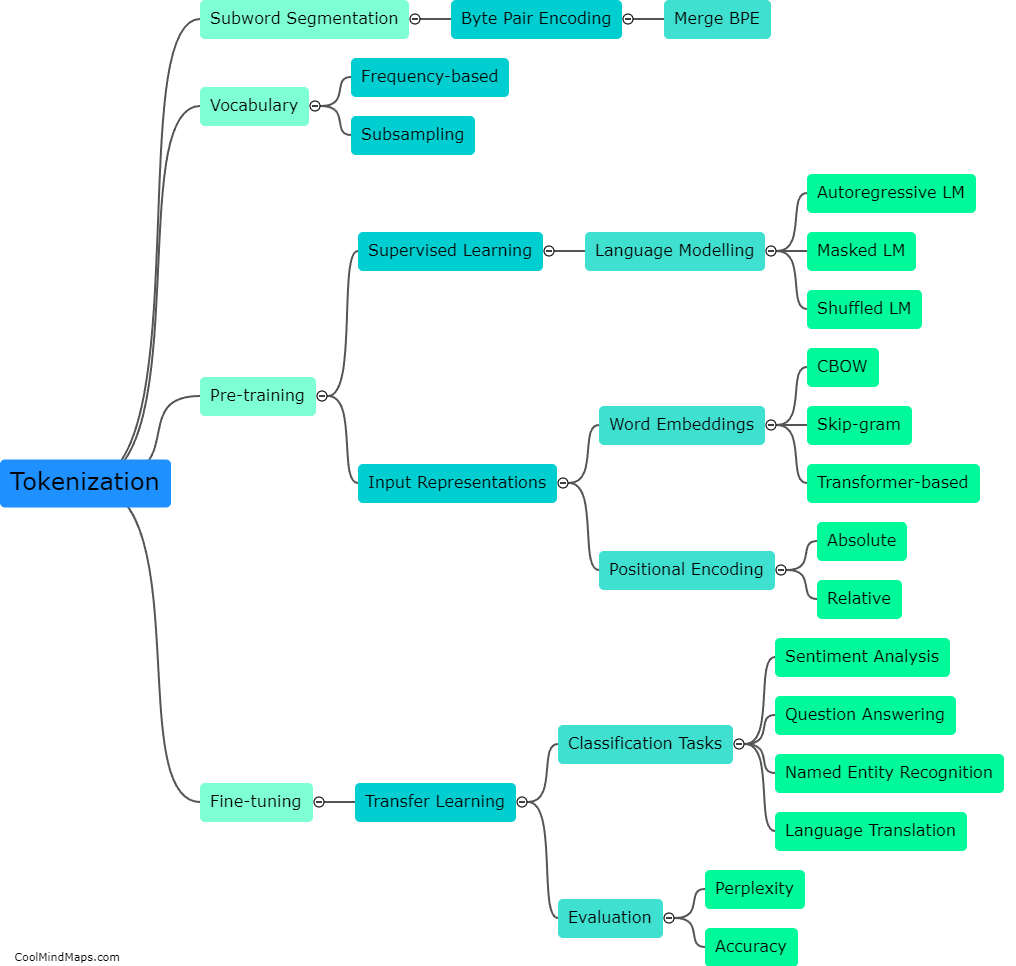

How is a Large Language Model trained?

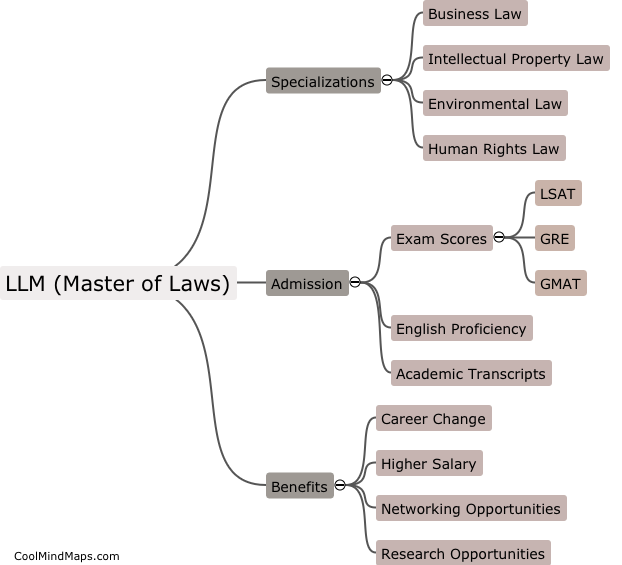

A Large Language Model (LLM) is a type of machine learning model that is trained on vast amounts of text to improve its language understanding and predictive abilities. Training requires enormous text datasets, such as books, articles, and web pages. The model is first pretrained without explicit labeling or annotation: it learns patterns and structures in the data, typically by predicting the next token in a sequence, so the training targets come from the text itself (often called self-supervised learning). The model is then fine-tuned, meaning it is trained further on smaller, task-specific data for tasks such as translation or text classification. LLMs are trained on powerful hardware, such as GPUs, and a training run can take several days or weeks, or longer for the largest models.
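To make the idea of learning without explicit labels concrete, here is a minimal Python sketch. It is a toy word-level bigram counter, not how real LLMs are trained (they use neural networks optimized by gradient descent over subword tokens). The function names `make_training_pairs`, `train_bigram`, and `predict_next`, along with both example corpora, are hypothetical and chosen only for illustration. The final step, which keeps updating the same counts on a small domain-specific text, is only a loose analogy for fine-tuning.

```python
from collections import Counter, defaultdict

def make_training_pairs(text):
    """Turn raw text into (context, target) pairs.
    No human labels are needed: the target is simply the next word."""
    tokens = text.lower().split()
    return list(zip(tokens[:-1], tokens[1:]))

def train_bigram(pairs, counts=None):
    """Count how often each word follows each context word.
    Passing existing counts continues training on new data."""
    if counts is None:
        counts = defaultdict(Counter)
    for context, target in pairs:
        counts[context][target] += 1
    return counts

def predict_next(counts, context):
    """Return the most frequent follower of `context`, or None if unseen."""
    followers = counts.get(context)
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# "Pretraining" on a general (toy, made-up) corpus.
corpus = "the cat sat on the mat . the cat ate the fish ."
pairs = make_training_pairs(corpus)
model = train_bigram(pairs)
before = predict_next(model, "the")

# Loose analogy for fine-tuning: continue training on domain-specific text.
domain_text = "the fish swam . the fish swam away ."
model = train_bigram(make_training_pairs(domain_text), counts=model)
after = predict_next(model, "the")

print(pairs[:3])
print(before, after)
```

In this sketch the prediction for the word following "the" shifts after the second round of training, which mirrors in miniature how further training on task-specific data adjusts a model's behavior.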

This mind map was published on 17 May 2023 and has been viewed 127 times.