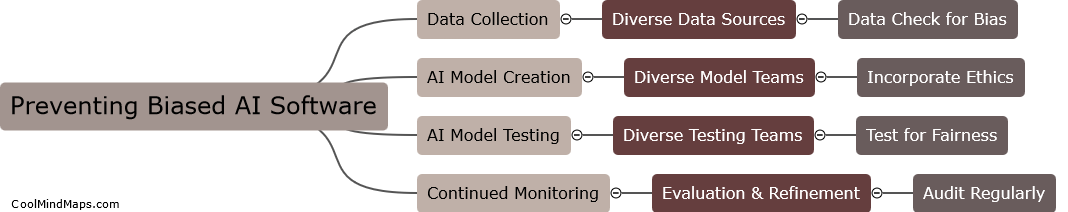

How can biased AI software be prevented?

Biased AI software can be prevented by adopting best practices in AI model development. These include selecting diverse, representative data sets that accurately capture the various aspects of the problem being addressed, and being transparent about the data sources and the methods used to select them. Developers should also ensure that algorithmic outputs do not reflect discriminatory patterns or propagate existing biases. Testing and validation mechanisms help detect and mitigate biases in AI models. Once biases are identified, developers must address them through interventions such as retraining or refining the model, or adjusting the application appropriately. Ultimately, achieving unbiased AI requires ongoing awareness, sensitivity, and prioritization of model transparency and ethical considerations.
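As one illustration of what a testing mechanism for bias might look like, the sketch below compares how often a model produces a positive prediction for each group, a check often called demographic parity. The function names, the toy predictions, and the group labels `"a"` and `"b"` are all hypothetical, and this is only one of several possible fairness checks, not a complete audit.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Return the share of positive (1) predictions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(predictions, groups):
    """Return the difference between the highest and lowest group selection rate."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical toy data: binary model outputs and a group label per record.
preds = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = selection_rates(preds, groups)
gap = demographic_parity_gap(preds, groups)
print(rates, gap)
```

A large gap does not prove discrimination on its own, but it can flag a model for closer review before interventions such as retraining are considered.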

This mind map was published on 15 June 2023 and has been viewed 121 times.