How can transparency and accountability be ensured in AI development?

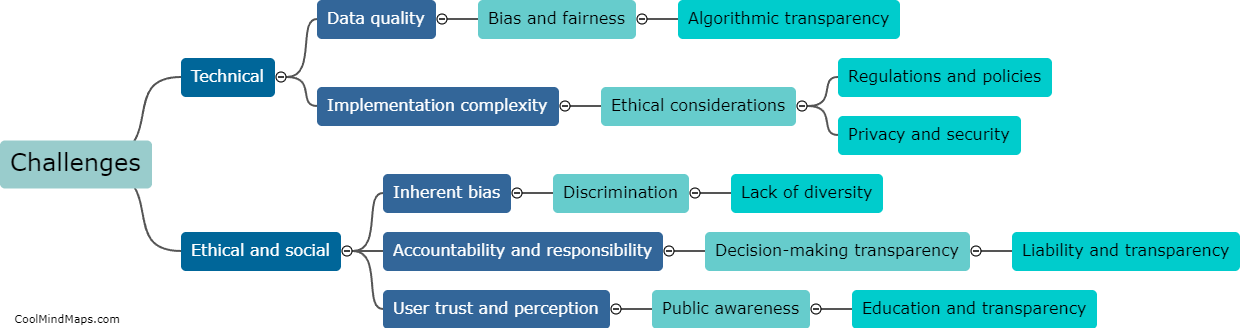

Transparency and accountability are crucial in the development and deployment of artificial intelligence (AI) systems. To ensure transparency, developers should openly share information about an AI system's goals, capabilities, and limitations. This includes providing clear explanations of the algorithms used, the data sources, and any potential biases. Accountability, on the other hand, can be ensured by establishing regulatory frameworks and guidelines that govern the development and deployment of AI. Moreover, conducting regular audits and assessments is important for evaluating an AI system's performance and its compliance with ethical standards. Additionally, involving diverse stakeholders, including experts from various fields and community representatives, in decision-making processes can foster accountability and reduce the risks of bias and discrimination. Overall, a comprehensive approach integrating transparency, accountability, and stakeholder engagement is key to promoting responsible and trustworthy AI development.

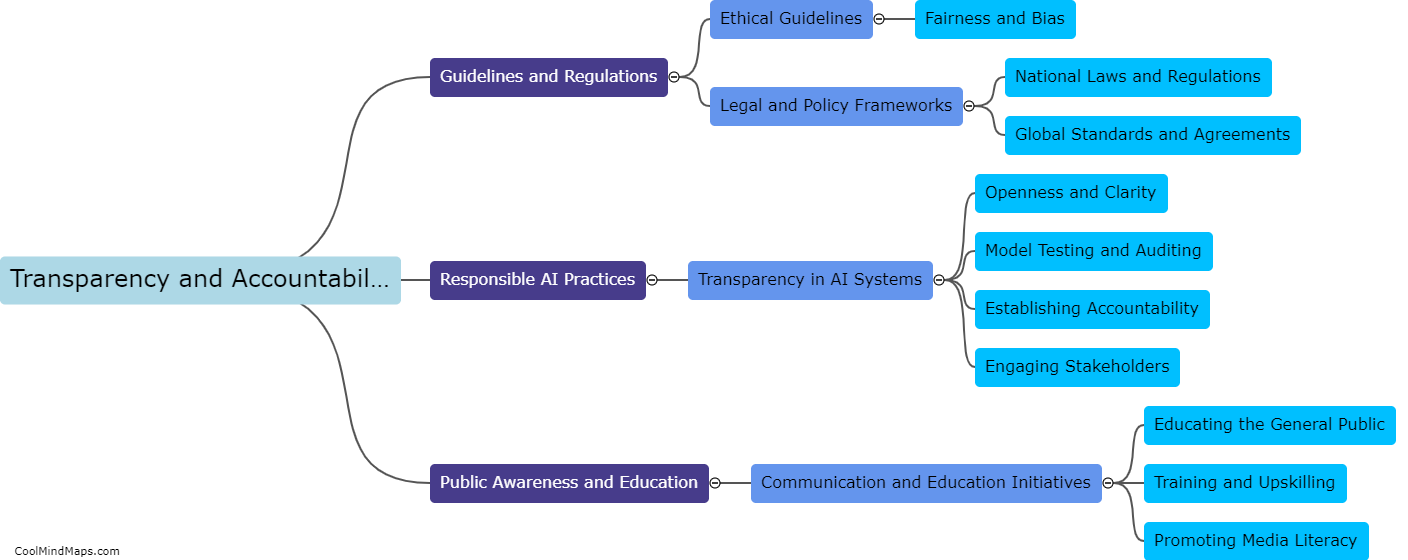

This mind map was published on 15 October 2023 and has been viewed 109 times.