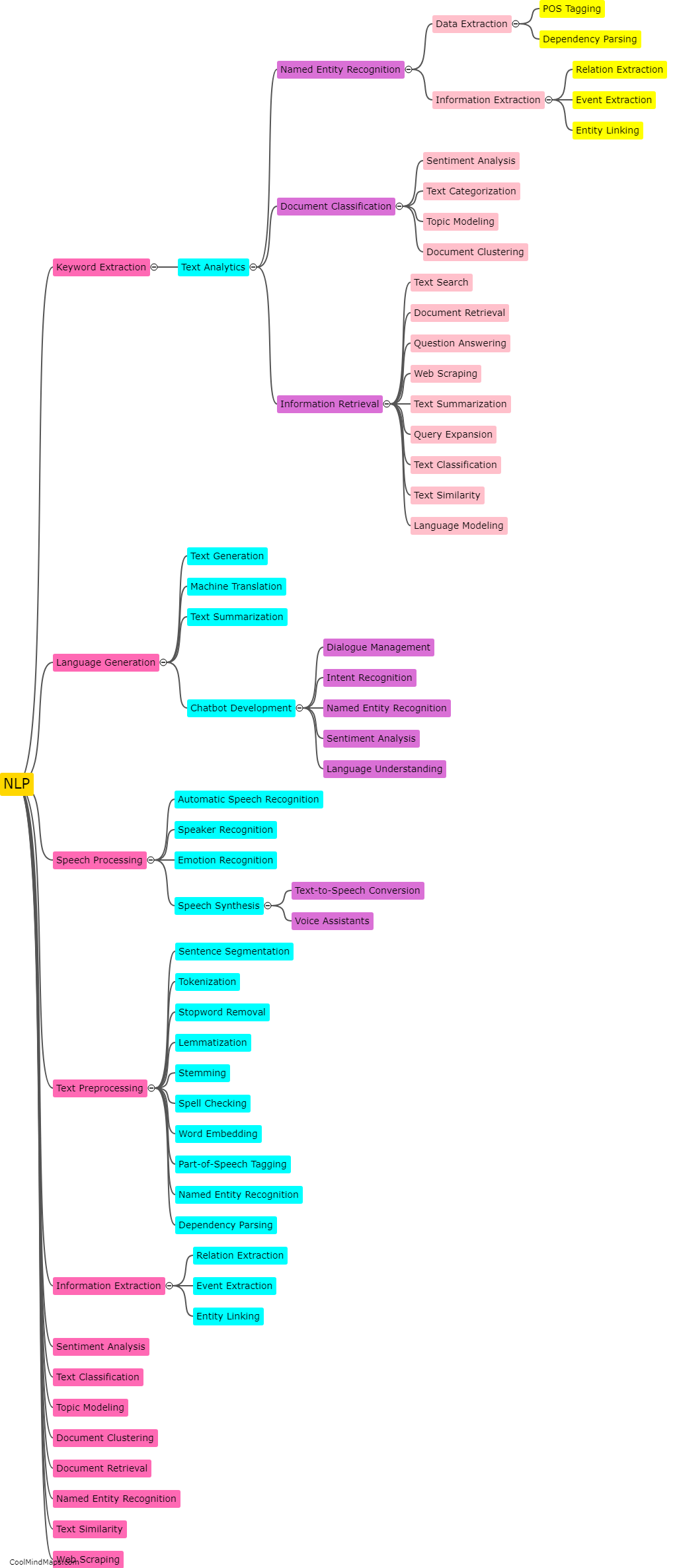

What are the challenges and limitations of using NLP in data analysis?

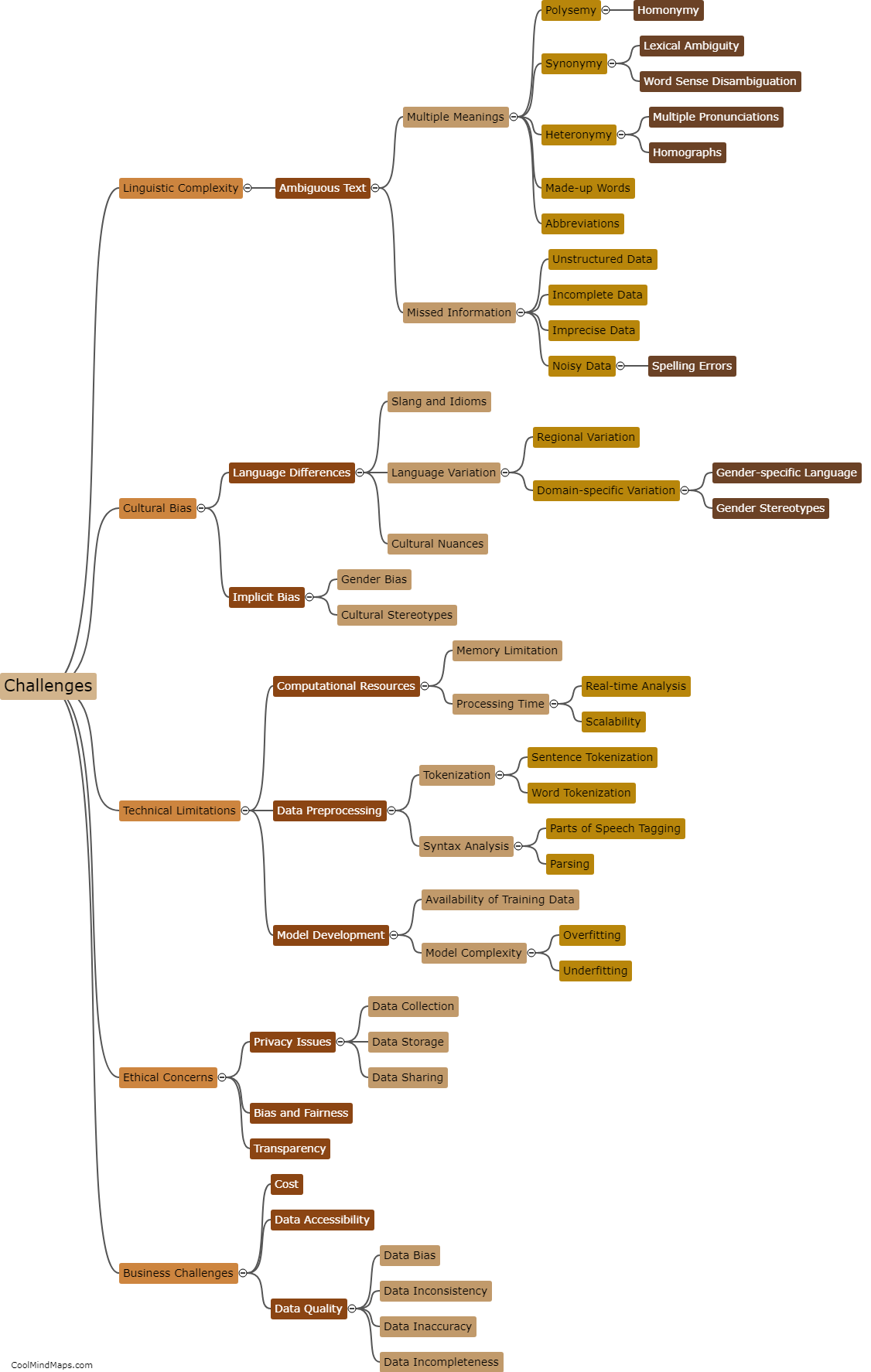

Natural Language Processing (NLP) has become a powerful tool for data analysis, but it also has significant challenges and limitations. First, the accuracy and reliability of NLP models depend heavily on the quality and diversity of their training data: if that data is biased or limited, the model's outputs can be biased or inaccurate as well. Second, NLP systems often struggle with context, sarcasm, irony, and ambiguity, which can lead them to misinterpret what a text actually means. Third, many NLP models, especially large ones, demand substantial computational resources and processing time, which makes real-time analysis and applications with tight latency constraints difficult. Finally, NLP pipelines may need access to personal or sensitive text, which raises privacy, ethical, and legal concerns. Overall, while NLP offers great potential for data analysis, these challenges must be addressed to obtain accurate and unbiased results.
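The difficulty with sarcasm can be illustrated with a minimal Python sketch of a naive lexicon-based sentiment scorer. The word lists and the `lexicon_score` function are hypothetical and exist only for illustration; they are not taken from any real library. Because the scorer only counts individual words, it has no way to recognize that a sentence is sarcastic.

```python
# Hypothetical toy lexicons, for illustration only.
POSITIVE = {"great", "love", "excellent", "good"}
NEGATIVE = {"bad", "terrible", "hate", "delay"}


def lexicon_score(text: str) -> int:
    """Return (#positive words - #negative words); a score > 0 is read as 'positive'."""
    # Lowercase each whitespace-separated token and strip surrounding punctuation.
    tokens = [t.strip(".,!?").lower() for t in text.split()]
    return sum(t in POSITIVE for t in tokens) - sum(t in NEGATIVE for t in tokens)


sarcastic = "Oh great, I just love waiting two hours for support."
print(lexicon_score(sarcastic))  # 2: scored as positive despite the obvious sarcasm
```

A human reader sees a complaint, but the word-counting approach sees two positive words and no negative ones. Handling cases like this requires models that take wider context into account, and even those can still get it wrong.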

This mind map was published on 7 November 2023.