What is the purpose of neural network regularization?

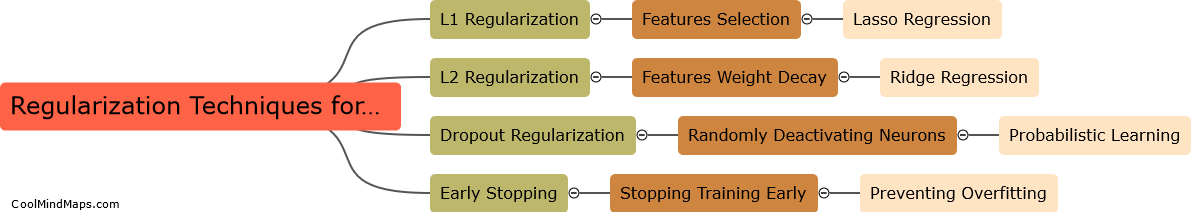

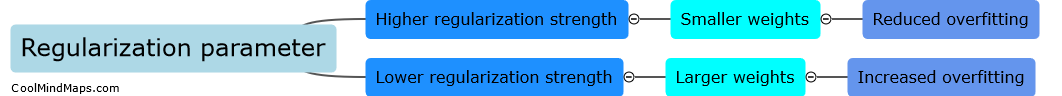

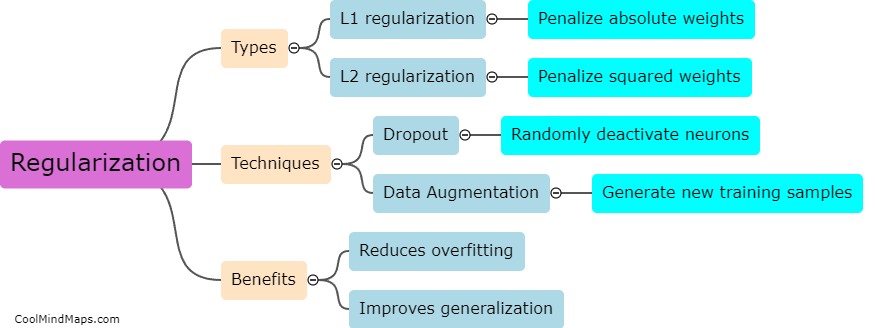

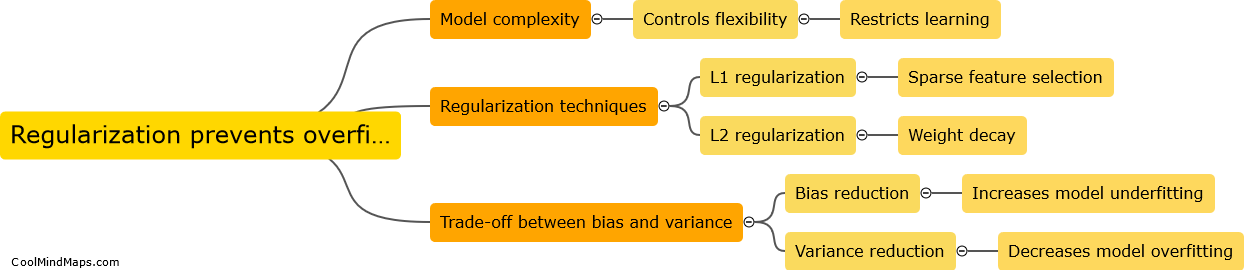

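The purpose of neural network regularization is to prevent overfitting and improve the model's ability to generalize. Overfitting occurs when a model fits the training data too closely, absorbing its noise and idiosyncrasies, and then performs poorly on unseen data. Regularization counters this by limiting the effective complexity of the network.

Some techniques, such as L1 and L2 regularization, do this by adding a penalty term to the loss function during training. The training objective becomes the data loss plus a coefficient (often written λ) times the penalty, and λ controls how strongly the penalty applies. Because the penalty grows with the size of the weights, it discourages the network from assigning excessively large weights to particular features or connections, which makes the model less sensitive to noise and irrelevant features. L1 regularization penalizes the sum of absolute weight values and tends to drive some weights to exactly zero. L2 regularization penalizes the sum of squared weights and shrinks large weights most strongly. Other methods work without a penalty term. Dropout randomly deactivates units during training so the network cannot rely on any single connection. Early stopping halts training once performance on held-out validation data stops improving. Together, these methods balance fitting the training data against overfitting it, producing more robust and reliable neural network models.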
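The penalty-based and penalty-free methods described above can be sketched in plain Python. This is a minimal, illustrative sketch, not a framework implementation. The helper names (`l1_penalty`, `l2_penalty`, `fit_1d`, `dropout`, `early_stopping`), the toy one-weight linear model, and the hyperparameters (`lam`, `lr`, `patience`) are hypothetical choices made for illustration. The sketch assumes mean squared error as the data loss and uses the common "inverted" variant of dropout.

```python
import random

# Hypothetical helpers for illustration only; not taken from any library.


def l1_penalty(weights):
    """L1 penalty: sum of absolute weights; tends to push some weights to exactly zero."""
    return sum(abs(w) for w in weights)


def l2_penalty(weights):
    """L2 penalty: sum of squared weights; shrinks large weights most strongly."""
    return sum(w * w for w in weights)


def fit_1d(xs, ys, lam=0.0, lr=0.05, steps=500):
    """Fit y ~ w * x by gradient descent on mean squared error + lam * l2_penalty([w])."""
    w = 0.0
    n = len(xs)
    for _ in range(steps):
        # Gradient of the data term mean((w*x - y)**2) with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n
        # Gradient of the penalty term lam * w**2: pulls w toward zero.
        grad += 2 * lam * w
        w -= lr * grad
    return w


def dropout(activations, p, training=True, rng=None):
    """Inverted dropout: zero each activation with probability p during training
    and scale the survivors by 1 / (1 - p) so the expected value is unchanged."""
    if not training or p == 0.0:
        return list(activations)  # dropout is disabled at inference time
    rng = rng if rng is not None else random.Random()
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


def early_stopping(val_losses, patience):
    """Walk through per-epoch validation losses and stop once the loss has not
    improved for `patience` consecutive epochs. Returns (best_epoch, stop_epoch)."""
    best_epoch, best_loss, waited = 0, float("inf"), 0
    stop_epoch = len(val_losses) - 1
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_epoch, best_loss, waited = epoch, loss, 0
        else:
            waited += 1
            if waited >= patience:
                stop_epoch = epoch
                break
    return best_epoch, stop_epoch


if __name__ == "__main__":
    xs, ys = [1.0, 2.0, 3.0], [2.0, 4.0, 6.0]  # toy data with y = 2x
    print(fit_1d(xs, ys))           # about 2.0: no penalty
    print(fit_1d(xs, ys, lam=1.0))  # about 1.647 (= 28/17): weight shrunk toward 0
    print(dropout([1.0, 1.0, 1.0, 1.0], p=0.5, rng=random.Random(0)))
    print(early_stopping([1.0, 0.8, 0.7, 0.72, 0.75, 0.71], patience=2))  # (2, 4)
```

On the toy data, the unpenalized fit recovers w ≈ 2. With λ = 1, the weight shrinks to 28/17 ≈ 1.647, which minimizes (14/3)(w - 2)² + w². Deep learning frameworks usually provide these techniques as built-ins, such as weight decay options in optimizers, dropout layers, and early-stopping callbacks. Hand-written versions like these are mainly useful for understanding what those built-ins do.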

This mind map was published on 4 September 2023 and has been viewed 94 times.