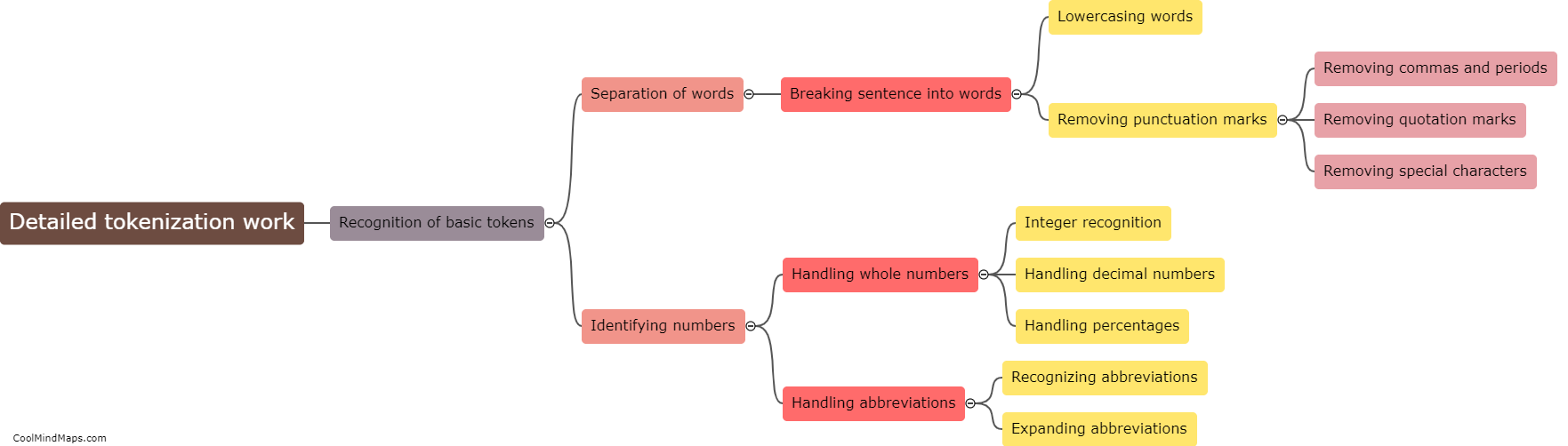

What is tokenization?

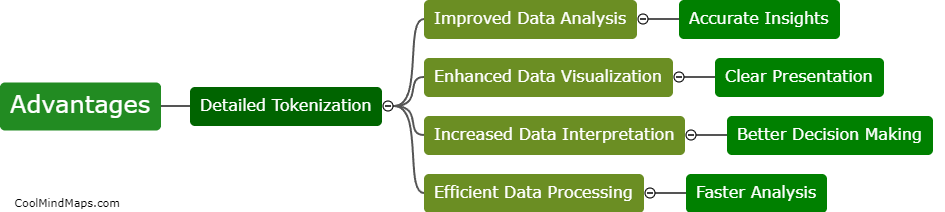

Tokenization is the process of breaking text into smaller units called tokens. Depending on the granularity desired, tokens can be whole words, subword pieces, or individual characters. For example, the sentence "Cats sleep." can be split into the word tokens `Cats`, `sleep`, and `.`, or into one token per character. Tokenization is an essential first step in natural language processing and computational linguistics, because tasks such as text classification, sentiment analysis, and information retrieval operate on tokens rather than on raw strings. Once text has been divided into tokens, it can be counted, indexed, or mapped to numeric IDs for further computation, which is why tokenization sits at the start of most text processing pipelines.
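As a rough illustration, the Python sketch below tokenizes text at the word level and at the character level. It then performs one common next step: mapping each distinct token to an integer ID. The helper names (`tokenize_words`, `tokenize_chars`, `build_vocab`) and the regular expression are illustrative assumptions, not a standard API. Production tokenizers, such as the subword tokenizers used by language models, handle many more cases, including contractions, URLs, and unseen words.

```python
import re

# Hypothetical pattern for illustration: a run of word characters
# (letters, digits, underscore) is one token, and each punctuation
# mark is its own token. Whitespace is discarded.
_WORD_PATTERN = re.compile(r"\w+|[^\w\s]")


def tokenize_words(text: str) -> list[str]:
    """Word-level tokenization: words and punctuation marks become tokens."""
    return _WORD_PATTERN.findall(text)


def tokenize_chars(text: str) -> list[str]:
    """Character-level tokenization: every character, including spaces, is a token."""
    return list(text)


def build_vocab(tokens: list[str]) -> dict[str, int]:
    """Assign each distinct token an integer ID in order of first appearance."""
    vocab: dict[str, int] = {}
    for token in tokens:
        if token not in vocab:
            vocab[token] = len(vocab)
    return vocab


if __name__ == "__main__":
    sentence = "the cat saw the dog."
    words = tokenize_words(sentence)
    print(words)
    # ['the', 'cat', 'saw', 'the', 'dog', '.']
    print(tokenize_chars("text"))
    # ['t', 'e', 'x', 't']
    vocab = build_vocab(words)
    print([vocab[w] for w in words])
    # [0, 1, 2, 0, 3, 4]  (the repeated "the" reuses ID 0)
```

The regular expression is where the granularity decision lives. Swapping in a different pattern, or a learned subword model, changes what counts as a token without changing the downstream steps that consume the token list.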

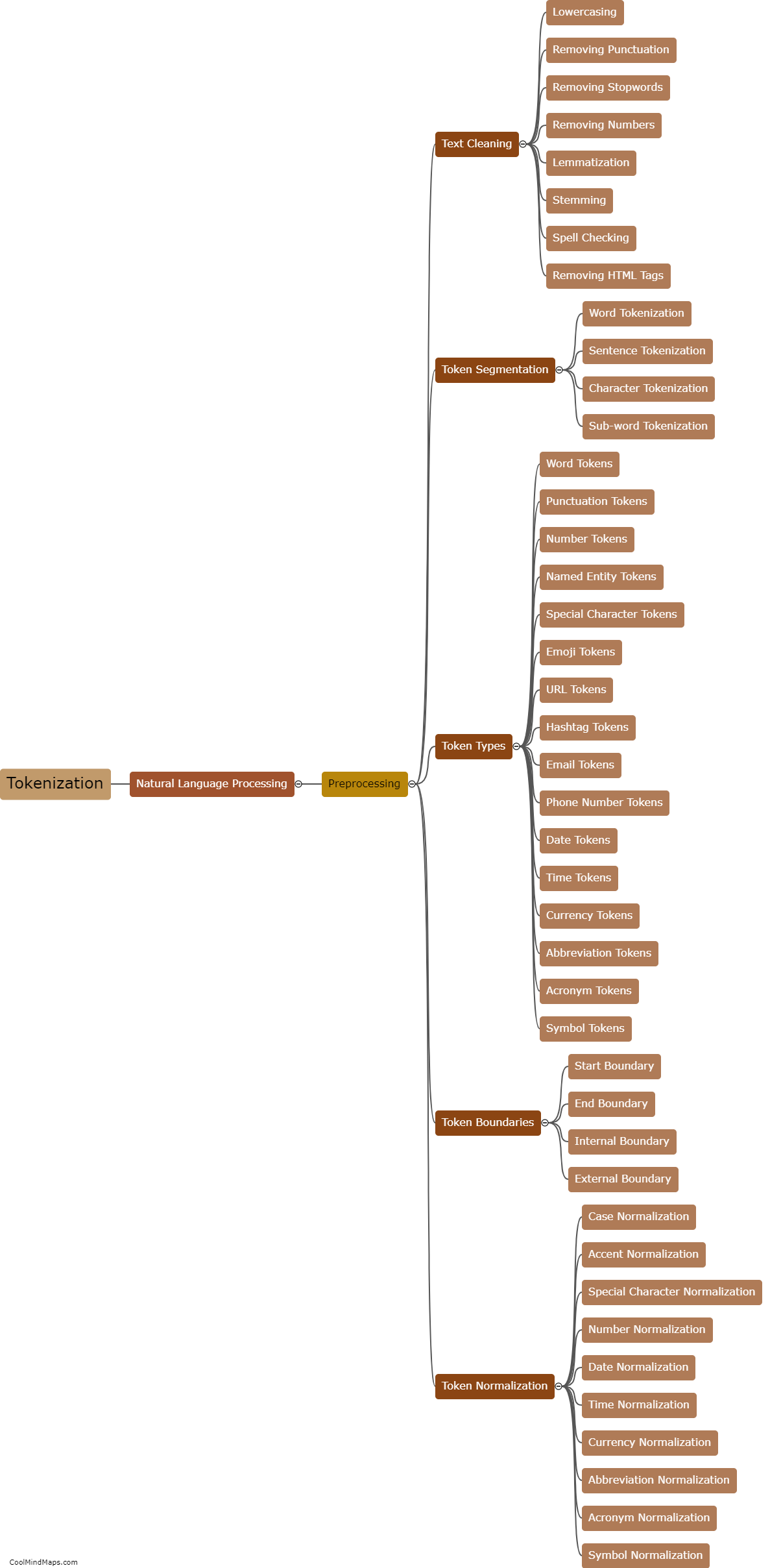

This mind map was published on 2 August 2023 and has been viewed 107 times.