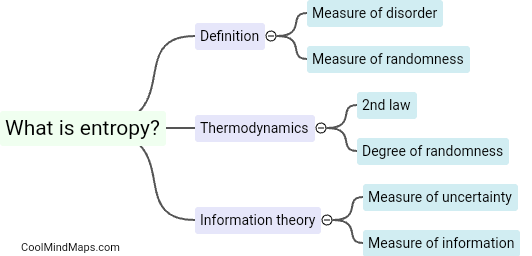

What is entropy?

Entropy is a thermodynamic quantity that measures the disorder or randomness of a system. It is often described as a measure of the energy that is not available to do work. As an isolated system moves towards equilibrium, its entropy increases because the particles within it become more randomly arranged. In simple terms, the more ways the parts of a system can be arranged, the higher its entropy.
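The link between random arrangement and equilibrium can be illustrated with a small sketch. It assumes a toy model that is not part of the mind map: 100 identical particles in a box, each sitting in either the left or the right half, with entropy given by Boltzmann's formula S = k_B ln W, where W is the number of arrangements. The function and variable names are illustrative only.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)


def boltzmann_entropy(n_total: int, n_left: int) -> float:
    """Entropy S = k_B * ln(W) when n_left of n_total particles are in the left half."""
    # W counts the ways to choose which particles sit on the left (toy model assumption).
    multiplicity = math.comb(n_total, n_left)
    return K_B * math.log(multiplicity)


n_total = 100  # hypothetical particle count, chosen only for illustration
entropies = {
    n_left: boltzmann_entropy(n_total, n_left) for n_left in range(n_total + 1)
}

# The arrangement with the highest entropy is the even split.
most_probable = max(entropies, key=entropies.get)
print(most_probable)  # prints 50
```

In this model, having every particle on one side allows only one arrangement and gives zero entropy, while the even split allows the most arrangements and gives the highest entropy. That even split is the equilibrium state the system drifts towards.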

This mind map was published on 3 August 2024.