What are the steps to normalize research data?

Normalizing research data involves several steps that make a dataset consistent, accurate, and reliable.

The first step is to clean the data by identifying duplicate records, data-entry errors, and outliers. Duplicates and clear errors are removed or corrected. Outliers should be investigated before deciding whether to exclude them, because dropping genuine extreme values can itself bias the results. Cleaning reduces the bias and inconsistency that faulty records would otherwise introduce.

The next step is to standardize the data by converting measurements recorded in different units into a common unit or scale, for example expressing every mass in grams. This makes values directly comparable and simplifies analysis across variables.

The third step is to transform the data, if needed, so that it meets the assumptions of the planned statistical analyses. Logarithmic, square-root, or other mathematical transformations can bring skewed data closer to a normal distribution and make variances more similar across groups (homogeneity of variance).

Finally, validate the normalized dataset by checking that it now satisfies those assumptions. This typically means assessing normality (for example with a Q-Q plot or a Shapiro–Wilk test), checking equality of variances (for example with Levene's test), and confirming that the assumptions of the chosen statistical models hold.

By following these steps, researchers can make sure their data is well prepared for analysis and interpretation, which supports reliable research findings.
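The four steps above can be sketched in Python using only the standard library. This is a minimal illustration under stated assumptions, not a general-purpose tool: the record layout (`id`, `value`, `unit`), the `TO_GRAMS` conversion table, the sample `RAW` records, and all function names are hypothetical. Two design choices are worth noting. Outliers are screened only after unit conversion, because 80000 mg and 80 g look wildly different until they share a unit. They are also flagged with the common 1.5 × IQR rule rather than deleted, so a person can decide whether each one is an error or a genuine extreme value. The validation step uses sample skewness as a rough symmetry check; in practice a formal test such as Shapiro–Wilk (`scipy.stats.shapiro`) or a Q-Q plot would normally be used.

```python
import math
import statistics

# Minimal sketch: record layout, unit table, and function names are hypothetical.
# Hypothetical conversion factors to one common unit (grams).
TO_GRAMS = {"g": 1.0, "kg": 1000.0, "mg": 0.001}


def clean(records):
    """Step 1: drop exact duplicate rows and obviously invalid entries."""
    seen = set()
    cleaned = []
    for rec in records:
        key = (rec["id"], rec["value"], rec["unit"])
        if key in seen:
            continue  # exact duplicate of a row already seen
        seen.add(key)
        if rec["value"] is None or rec["value"] <= 0 or rec["unit"] not in TO_GRAMS:
            continue  # missing value, impossible (non-positive) mass, or unknown unit
        cleaned.append(rec)
    return cleaned


def to_common_unit(records):
    """Step 2: express every measurement in grams so values are comparable."""
    return [rec["value"] * TO_GRAMS[rec["unit"]] for rec in records]


def flag_outliers(values, k=1.5):
    """Return indices outside [Q1 - k*IQR, Q3 + k*IQR]; flagged for review, not deleted."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [i for i, v in enumerate(values) if v < low or v > high]


def log_transform(values):
    """Step 3: natural log to reduce right skew; every value must be > 0."""
    return [math.log(v) for v in values]


def skewness(values):
    """Step 4 (rough check): moment skewness m3 / m2**1.5; near 0 means roughly symmetric."""
    n = len(values)
    mean = statistics.fmean(values)
    m2 = sum((v - mean) ** 2 for v in values) / n
    m3 = sum((v - mean) ** 3 for v in values) / n
    return m3 / m2 ** 1.5


# Hypothetical sample records with a duplicate, an impossible value, and an unknown unit.
RAW = [
    {"id": 1, "value": 120.0, "unit": "g"},
    {"id": 2, "value": 0.5, "unit": "kg"},
    {"id": 2, "value": 0.5, "unit": "kg"},     # duplicate row
    {"id": 3, "value": 80000.0, "unit": "mg"},
    {"id": 4, "value": -5.0, "unit": "g"},     # impossible value
    {"id": 5, "value": 300.0, "unit": "g"},
    {"id": 6, "value": 150.0, "unit": "g"},
    {"id": 7, "value": 0.2, "unit": "kg"},
    {"id": 8, "value": 250.0, "unit": "g"},
    {"id": 9, "value": 9.0, "unit": "kg"},
    {"id": 10, "value": 150.0, "unit": "lb"},  # unit not in TO_GRAMS
]

if __name__ == "__main__":
    grams = to_common_unit(clean(RAW))
    logged = log_transform(grams)
    print("outlier indices:", flag_outliers(grams))
    print(f"skewness raw={skewness(grams):.2f} log={skewness(logged):.2f}")
```

With these sample records, the log transform reduces the skewness but does not remove it, which is the kind of result the validation step is meant to surface before any analysis is run.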

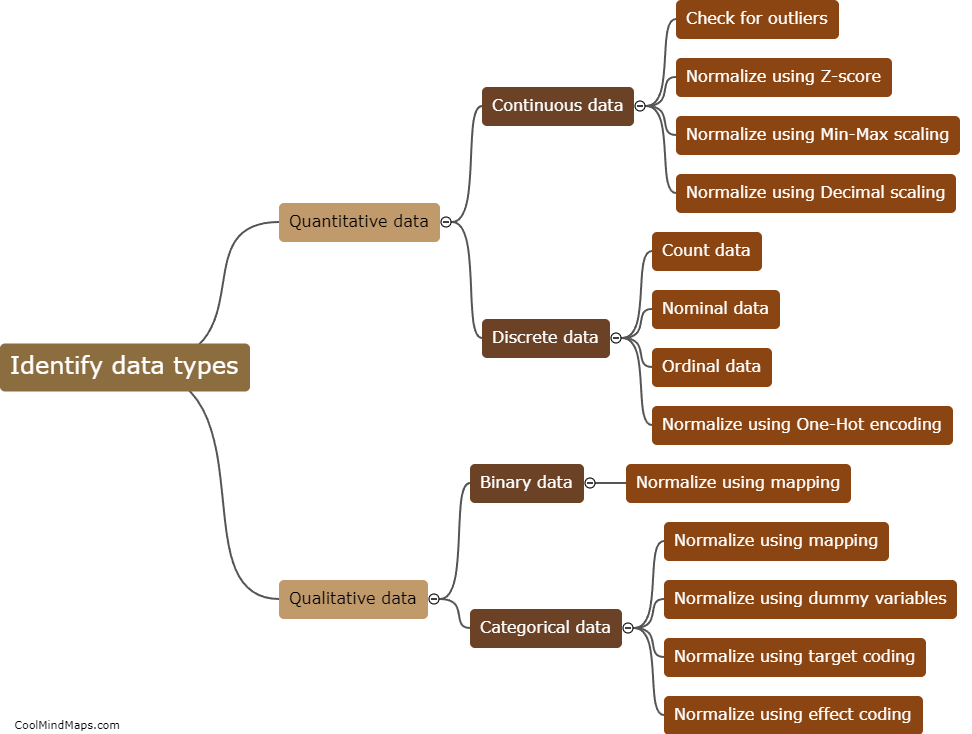

This mind map was published on 23 July 2023.