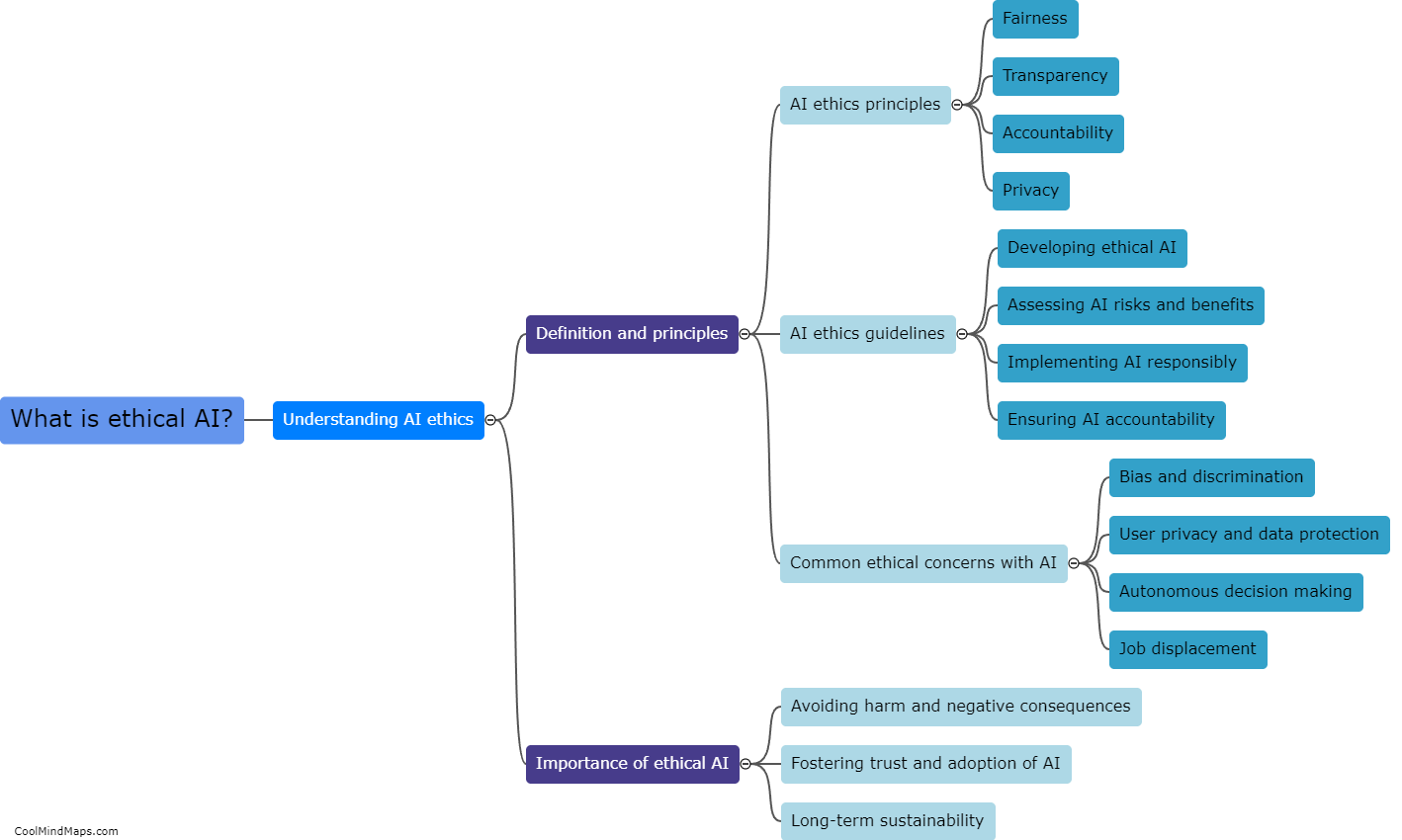

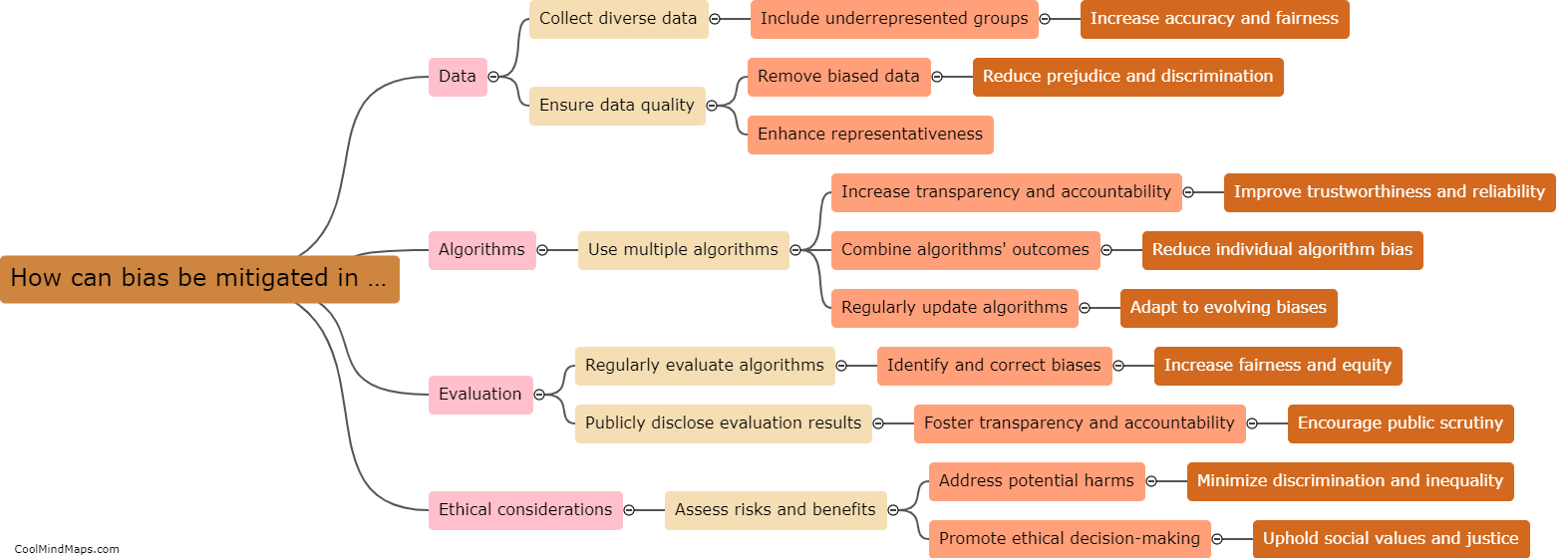

How can bias be mitigated in AI algorithms?

Bias in AI algorithms can be mitigated through several complementary approaches. The first step is to ensure diverse and representative training data: the data should cover different demographics, locations, and backgrounds so that no group is under-represented. Another method is to apply carefully designed pre-processing techniques that remove or down-weight discriminatory information, such as protected attributes and features that act as proxies for them. It is also essential to involve multidisciplinary teams, including individuals with diverse perspectives, throughout algorithm development to identify and address potential biases. Regular audits of AI systems can help detect and correct bias that arises over time, for example as the data or the user population shifts. Lastly, organizations must promote transparency, accountability, and fairness in their AI systems, making sure that decision-making processes are explainable so that they do not reinforce existing biases or create new ones. Taken together, these measures can substantially reduce bias in AI algorithms, although they are unlikely to eliminate it entirely.
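To make the idea of a regular audit more concrete, here is a minimal sketch in Python of one common check: comparing how often a model returns a positive decision for each group (the demographic parity difference). The function names, group labels, and toy predictions are all hypothetical and chosen only for illustration. A real audit would use the system's actual outputs and usually several fairness metrics, not just this one.

```python
from collections import defaultdict


def selection_rates(groups, predictions):
    """Return the share of positive (1) predictions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, pred in zip(groups, predictions):
        totals[group] += 1
        if pred == 1:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_difference(groups, predictions):
    """Gap between the highest and lowest group selection rates (0 means equal rates)."""
    rates = selection_rates(groups, predictions)
    return max(rates.values()) - min(rates.values())


# Hypothetical toy data: group membership and the model's binary decisions.
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
predictions = [1, 1, 1, 0, 1, 0, 0, 0]

rates = selection_rates(groups, predictions)
gap = demographic_parity_difference(groups, predictions)
print(rates)  # per-group positive rates
print(gap)    # a large gap is a signal to investigate, not proof of bias
```

Running a check like this on a schedule, and tracking the gap over time, is one simple way to notice when a system starts treating groups differently. What size of gap counts as acceptable is a policy decision that the code cannot make.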

This mind map was published on 15 October 2023.