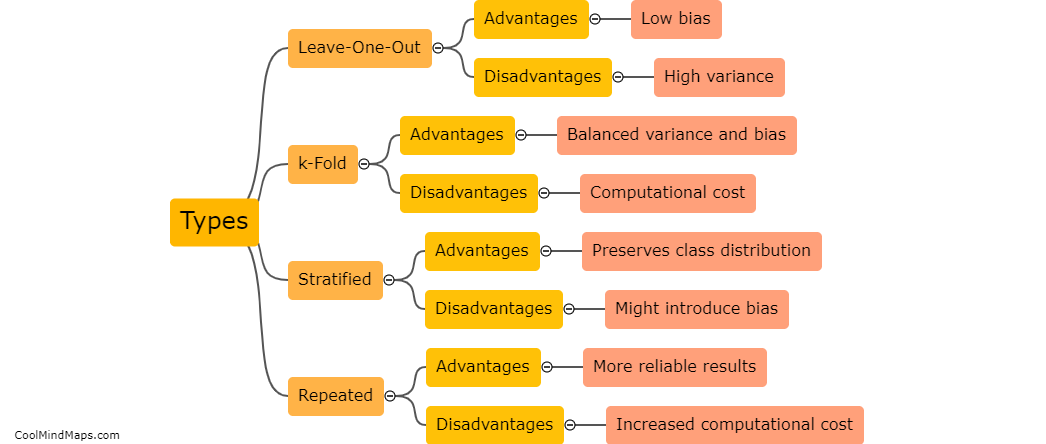

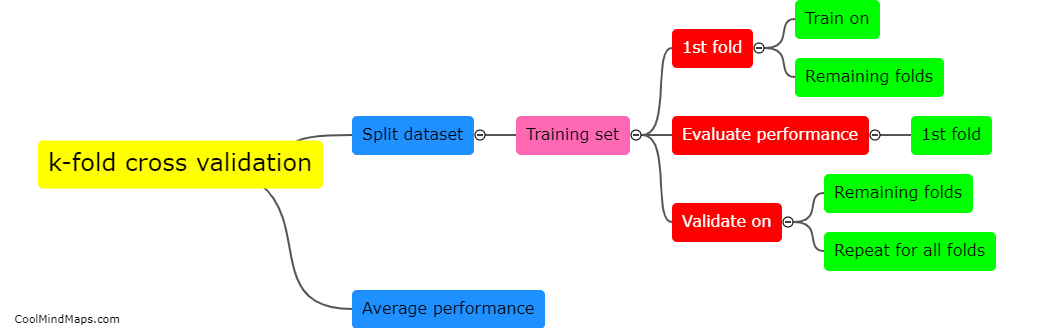

How does k-fold cross validation work?

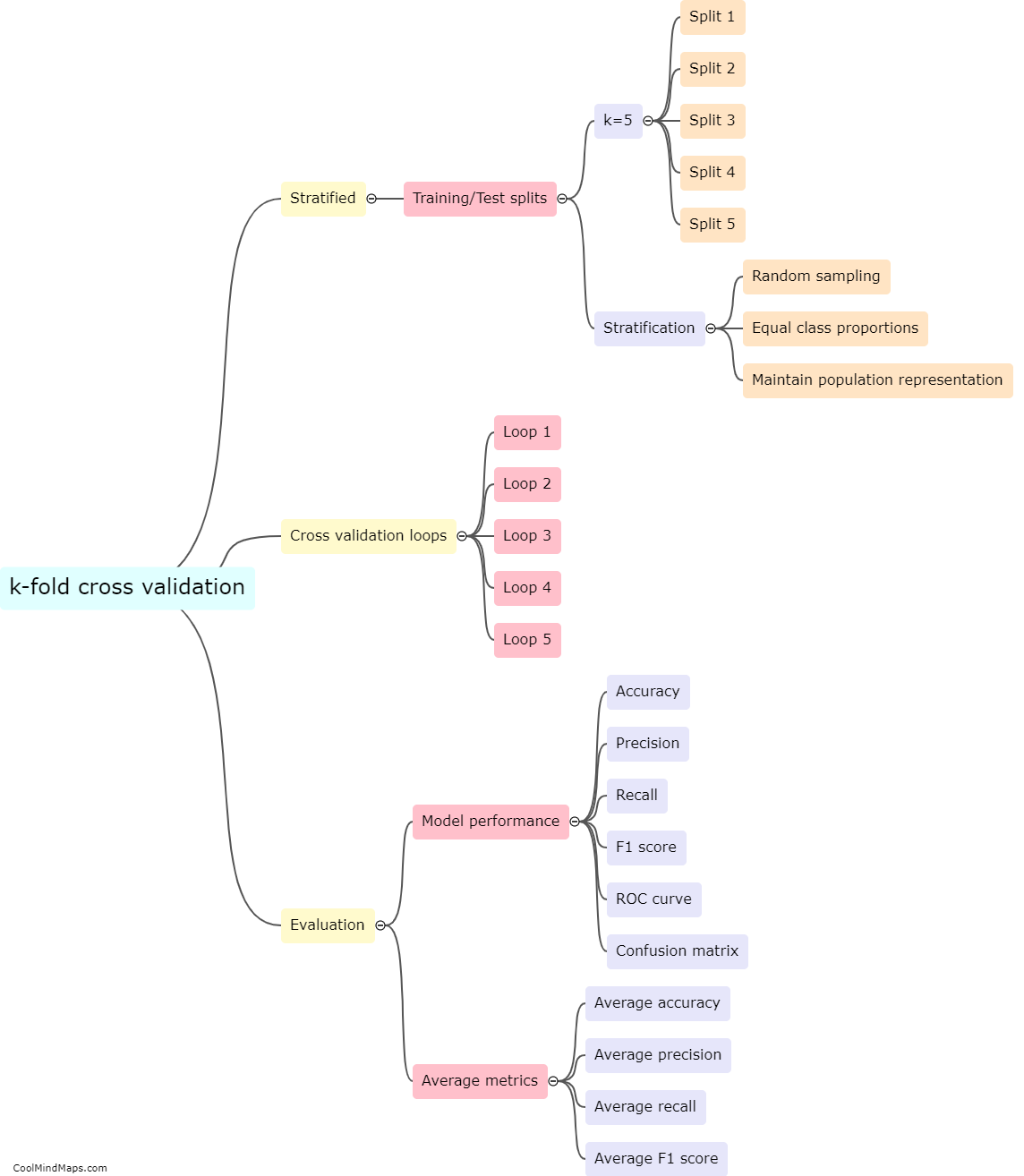

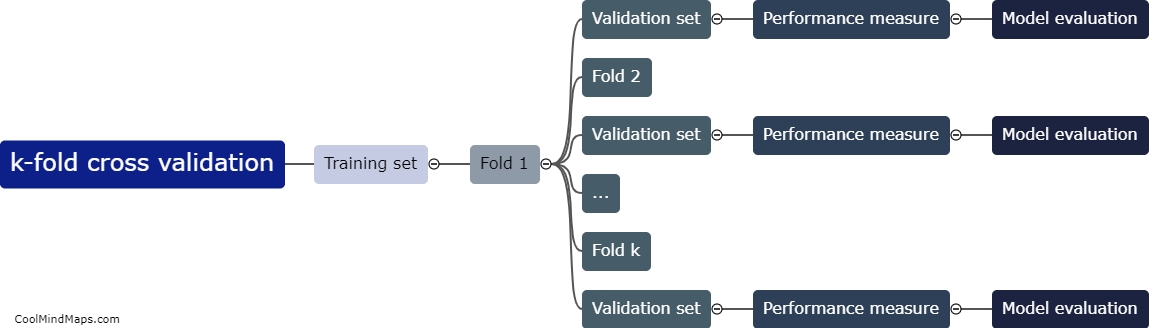

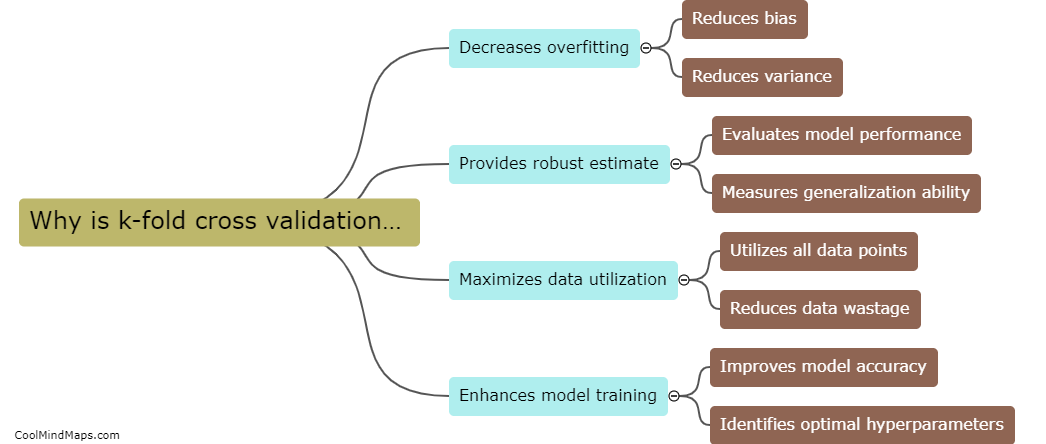

K-fold cross validation is a technique used in machine learning to estimate how well a model generalizes to data it has not seen. The available data is split into k folds of roughly equal size (exactly equal only when the number of samples is divisible by k). The model is then trained k times, each time from scratch, using k-1 folds as the training data and the remaining fold as the validation data, so every data point is used for validation exactly once and for training k-1 times. The performance metrics from the k iterations are averaged to give an overall estimate, and their spread across folds indicates how stable that estimate is. K-fold cross validation does not itself prevent overfitting or underfitting, but it helps detect them: a model that scores well on its training folds and poorly on the held-out folds is likely overfitting, while one that scores poorly on both is likely underfitting.
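
The procedure described above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not a reference implementation: the names `k_fold_splits`, `cross_validate`, `fit_mean`, and `predict_mean` are hypothetical, the metric is assumed to be mean squared error, and the "model" is a toy that always predicts the mean of its training targets.

```python
import random
from statistics import mean


def k_fold_splits(n_samples, k, seed=0):
    """Yield (train_indices, val_indices) pairs, one pair per fold."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    # Shuffle once so the folds do not depend on the original data order.
    random.Random(seed).shuffle(indices)
    # The first (n_samples % k) folds get one extra sample,
    # so fold sizes differ by at most one.
    base, extra = divmod(n_samples, k)
    start = 0
    for fold in range(k):
        size = base + (1 if fold < extra else 0)
        val = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, val
        start += size


def cross_validate(xs, ys, fit, predict, k=5, seed=0):
    """Train k times and return (per-fold MSE list, mean MSE)."""
    scores = []
    for train, val in k_fold_splits(len(xs), k, seed):
        # Fit a fresh model on the k-1 training folds only.
        model = fit([xs[i] for i in train], [ys[i] for i in train])
        # Score it on the single held-out fold.
        errors = [(predict(model, xs[i]) - ys[i]) ** 2 for i in val]
        scores.append(mean(errors))
    return scores, mean(scores)


# Hypothetical toy model: always predicts the mean of the training targets.
def fit_mean(train_x, train_y):
    return mean(train_y)


def predict_mean(model, x):
    return model


if __name__ == "__main__":
    xs = list(range(10))
    ys = [2.0 * x + 1.0 for x in xs]
    fold_scores, average = cross_validate(xs, ys, fit_mean, predict_mean, k=5)
    print("per-fold MSE:", fold_scores)
    print("mean MSE:", average)
```

In practice a library routine is usually preferable to hand-rolled splitting; the sketch is only meant to make the "train k times, validate on each fold once, then average" loop concrete.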

This mind map was published on 23 January 2024.